Computing power at the device level is transforming process applications

Find out more about edge computing in this episode of the Control Amplified podcast featuring Peter Zornio, CTO at Emerson Automation Solutions.

It's the portability of computing power, the fact that microprocessors can perform their calculations almost anywhere, that gives edge networking, computing, monitoring, automation and control its true value. For a while, it appeared that all production information was on its way to the cloud, but cloud services, their developers and their users have since realized there is far too much data for them to handle, while many networks still face connectivity, latency and reliability hurdles.

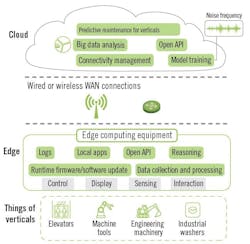

Fortunately, edge computing in or close to sensors, instruments, analyzers, I/O, controls and other plant-floor devices is enabling routine data gathering, storage on databases and latency-reducing data processing in many process applications, while also delivering less burdensome reports-by-exception, anomalies and longer-term trends for analysis to the cloud and other enterprise-level users (Figure 1).

Figure 1: Several of the primary players on the edge, their functions, and some of the equipment they can monitor and control are compared to more cloud-based functions in the "Introduction to edge computing in IIoT" whitepaper by the Industrial Internet Consortium's (IIC) Edge Computing Task Group.

Beyond distributed

"The edge means using general-purpose computing power as close as possible to the physical world where data is generated," says Benson Hougland, vice president of marketing and product strategy at Opto 22. "This is different from the distributed control of the past because, while we're moving the same decision-making ability to the edge, distributed control was run by a large CPU that managed all its nodes, but had less overall horsepower, and had to rely more on critical networking connections. Edge computing spreads its intelligence, CPUs and memory more widely, can operate more autonomously, preprocesses information, and can initiate communications to publish and get data where it needs to go."

For instance, Plummer's Environmental Services in Byron Center, Mich., collects and disposes of non-hazardous liquid waste, usually containing detergents and solvents from industrial cleaning and degreasing applications. Until recently, however, its operators had difficulty pumping from their tanker trucks into Plummer's 65,000-gallon holding tank because the only way to tell if there was enough room was to inspect it visually, which led to misestimates, spills into a containment area, and costly cleanups.

As a result, Plummer's enlisted system integrator Perceptive Controls in Plainwell, Mich., which installed an ultrasonic level sensor at the top of the tank and connected it to a SNAP PAC R-series controller from Opto 22. The controller triggers alert lights, alarm horns and emails when the tank reaches capacity, while an Internet protocol (IP) camera mounted at the top provides a real-time view of the tank level. Opto 22's groov mobile interface makes tank level data and live video available on operators' smartphones and tablet PCs. Subsequent project phases were expected to add pump controls and automatic shutoffs, as well as real-time monitoring of clients' holding tanks, so trucks could be dispatched before those tanks filled and possibly hindered production.
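The alerting step in a retrofit like this can be sketched in a few lines. The minimal Python sketch below assumes a sensor that reports the distance from the tank top down to the liquid surface, a hypothetical 20-ft usable height, and hypothetical 95% trip and 90% reset points; it is not Perceptive Controls' program, which ran on the Opto 22 controller. The gap between the trip and reset points (hysteresis) keeps the horn from chattering as the surface ripples around the alarm level.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TankLevelMonitor:
    """Hypothetical high-level alarm for a top-mounted ultrasonic sensor.

    The sensor reports the distance from its face down to the liquid
    surface, so level = usable height - measured distance.
    """
    usable_height_ft: float       # assumed sensor-to-bottom distance
    high_alarm_pct: float = 95.0  # assumed "at capacity" trip point
    reset_pct: float = 90.0       # alarm clears only below this (hysteresis)
    alarm_active: bool = False

    def level_pct(self, distance_ft: float) -> float:
        level_ft = self.usable_height_ft - distance_ft
        # Clamp so echo noise can't report below empty or above full
        return max(0.0, min(100.0, 100.0 * level_ft / self.usable_height_ft))

    def update(self, distance_ft: float) -> Optional[str]:
        """Return 'ALARM' or 'CLEAR' when the alarm state changes, else None."""
        pct = self.level_pct(distance_ft)
        if not self.alarm_active and pct >= self.high_alarm_pct:
            self.alarm_active = True
            return "ALARM"  # a real system would drive lights, horn and email here
        if self.alarm_active and pct <= self.reset_pct:
            self.alarm_active = False
            return "CLEAR"
        return None


monitor = TankLevelMonitor(usable_height_ft=20.0)
events = [monitor.update(d) for d in (5.0, 1.5, 0.8, 1.2, 2.5)]
print(events)  # [None, None, 'ALARM', None, 'CLEAR']
```

The 94% reading after the trip does not clear the alarm, because the level has not yet fallen below the reset point.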

Steffen Ochsenreither, business development manager for products and solutions including IIoT at Endress+Hauser, adds, "There are many similarities between distributed control and edge computing, such as remote connectivity and decentralization, but we believe they're not exactly the same because distributed control is always supervised. While the edge is just doing monitoring now, its future will be autonomous control by local intelligence that runs operations and syncs with supervisory systems periodically via message queuing telemetry transport (MQTT) or low-bandwidth, long-range wide-area network (LoRaWAN) wireless."

Definitions and databases

Once initial exposure to edge computing indicates how it might be applied on the plant floor, potential users seek to fit its concepts into their industries. However, because so many edge devices like network gateways and even their microprocessors come from the information technology (IT) side, many process industry users are learning its language, as well as developing their own lingo to describe how those functions are deployed in their applications.

"As we get more involved with digital transformation, we're seeing a new vocabulary emerge for it," says Peter Zornio, CTO at Emerson Automation Solutions. "For example, an edge gateway used to be just a gateway, while the Internet of Things (IoT) is really just SCADA on the Internet. However, as the IT community discovered IoT, it started coming up with sexier terms for what we'd already been doing in process automation for 30 or 40 years. These terms are used to explain operations technology (OT) to IT's bigger audience and community that aren't familiar with it. Even the term "OT" that describes technology and systems at the plant level was created for this transition because we didn't have it before. So now the "edge" is where process data, analytics and results are done at the OT layer, rather than sending it to a control room, off premise or to the cloud. As a result, edge computing is another new generation of plant-level process control system (PCS) for taking data from sensors and automation systems, and using it to generate new, valuable, action-oriented information, such as energy consumption for optimization, reliability and failure prediction, and better process safety."

To contribute to increasing edge efforts, Emerson's PlantWeb Insight software presently runs next to sensors and wireless gateways, but Zornio reports it will soon run inside those gateways, where it will continue to do jobs like predicting and reporting pump performance, advising users when to clean equipment, and alerting about upcoming failures. "It's just a choice of where you want to run your data processing. This usually depends on how much latency you're prepared to accept, because maybe your application needs fast analysis of its vibration data," adds Zornio. "This is why Emerson's solutions can run at the control data center or a data center at an integrated operations center. We'll always have a natural split between what happens at the edge or in the cloud, but I don't think process control is going to leave the edge. It will still be done at the plant or device level, even as indirect, high-level analysis happens elsewhere."
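As an illustration of why fast vibration analysis favors the edge, the sketch below reduces a block of raw samples to an RMS value, a peak value and an alert flag, so only a few numbers need to leave the device. It is a generic, assumption-based example, not how PlantWeb Insight computes pump health; the block size, units and alert limit are hypothetical.

```python
import math
from typing import NamedTuple, Sequence


class BlockSummary(NamedTuple):
    rms_value: float  # overall vibration energy for the block
    peak: float       # largest absolute excursion
    alert: bool       # True when rms_value exceeds the configured limit


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square of one block of vibration samples."""
    if not samples:
        raise ValueError("need at least one sample")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def summarize_block(samples: Sequence[float], alert_rms: float) -> BlockSummary:
    """Reduce a raw block to the few numbers worth sending upstream."""
    value = rms(samples)
    return BlockSummary(rms_value=value,
                        peak=max(abs(x) for x in samples),
                        alert=value > alert_rms)


# One cycle of a hypothetical 2.0 mm/s sine wave, sampled 100 times
block = [2.0 * math.sin(2 * math.pi * k / 100) for k in range(100)]
summary = summarize_block(block, alert_rms=1.0)
```

For a pure sine wave, RMS equals the amplitude divided by the square root of 2 (about 1.414 here), which makes a handy sanity check before trusting the summary on real data.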

Organizing at the precipice

One snag with the edge computing idea is it's hard to decide what's on the edge or not. "From the cloud computing perspective, everything outside the cloud is on the periphery," says Bob McIlvride, communications director at Skkynet (www.skkynet.com). "However, from the control room's point of view, everything in the plant is on the edge. Even on the plant floor, there's an edge—the sensors and devices that interact with the physical world. To us, edge computing simply means putting computing power as close to the source as possible, and filtering and regulating the amount and quality of data that goes to the cloud."
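Filtering data before it goes to the cloud is often done with a deadband, or report-by-exception, filter: a value is forwarded only when it has moved far enough from the last value that was sent. The Python sketch below assumes (timestamp, value) pairs and a hypothetical per-tag deadband; it illustrates the general technique, not Skkynet's DataHub implementation.

```python
from typing import Iterable, Iterator, Optional, Tuple


def report_by_exception(readings: Iterable[Tuple[float, float]],
                        deadband: float) -> Iterator[Tuple[float, float]]:
    """Yield (timestamp, value) only when value moves more than `deadband`
    away from the last value that was reported."""
    last_sent: Optional[float] = None
    for ts, value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            last_sent = value
            yield ts, value


readings = [(0, 10.0), (1, 10.2), (2, 10.4), (3, 11.0), (4, 10.9), (5, 9.5)]
sent = list(report_by_exception(readings, deadband=0.5))
print(sent)  # [(0, 10.0), (3, 11.0), (5, 9.5)]
```

Six readings shrink to three. Many systems also send a periodic heartbeat so a quiet tag can be told apart from a dead one; that refinement is left out here.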

It seems everyone thinks of themselves as the center. However, once enough edge-related concepts, terminology and comparisons have been at least partly agreed on, developers and users report that edge computing starts to impact the basic structures of their plant- and device-level systems.

"Edge computing also enables a more loosely coupled architecture, which allows users to build solutions that are unique to the needs of their applications," adds Opto 22's Hougland. "The issue is always network latency, but now much of it can be taken out because not every reading has to be sent to a master, and the edge device can look at the data points and send back just what's needed. This also helps security because tightly coupled architectures use dumb, distributed devices that aren't secure by definition, while smarter edge devices can decide who gets to connect according to what parameters. This is like comparing an old telephone on the wall to a smart phone, which is the classic example of an edge device that's mobile, has more memory, an array of built-in sensors, and can deliver computing power and run software including PLC programs and custom code wherever needed.

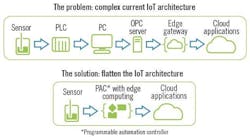

"The edge flattens the usual, stitched-together architecture of HMIs, gateways, OPC UA, historians/databases and other tasks into one thing (Figure 2). A regular PC can do general-purpose computing, but then users must pay to manage, license, maintain and upgrade it. Once they get an edge device doing these jobs, users are saying, 'Hey, I can get rid of my PC," plus they're also easier for IT to manage. Just as many people are using their smart phones and other mobile device more than they're laptops and other PCs, the same is happening in process automatione

Figure 2: An essential advantage of edge computing is it can collapse several layers of traditional control and communication networks, such as PLCs, PCs, OPC servers and edge gateways, and combine them into one streamlined, optimized and secured architecture that can input physical signals and output IoT-ready data with TCP/IP, HTTP, MQTT and RESTful APIs, according to the "Edge Computing Primer" whitepaper by Opto 22. Source: Opto 22

Travis Cox, co-director of sales engineering at Inductive Automation, adds, "Edge computing can be broken up into three categories with different purposes. The first is using edge devices to bridge legacy networking protocols and fieldbuses to newer, publish-subscribe ones like MQTT. Edge devices can be embedded PCs like those from Advantech, gateways like Opto 22's groov, Raspberry Pi boards, or even fog computing software running switch or router functions. There's so much legacy equipment and protocols out there that they can't all be replaced, so gateways and other edge devices are critically important."

Cox adds that adopting an open-standard, publish-subscribe protocol like MQTT creates an enterprise service bus for the industrial world. "This is a common area that also allows auto-discovery of data without mapping and without having to know whose end devices are used," explains Cox. "This allows plug-and-play and fog computing. For example, the legacy equipment needed to talk to 1,000 oil and gas wells would include a complex polling engine, 900-MHz telemetry, and slow polling devices from the control system. However, Freewave is now providing its 900-MHz devices with fog computing, which means if PLCs and radios are already at the wells, then the user can run SCADA software such as Ignition, talk to their PLCs and poll them locally, store-and-forward information, publish data by exception, eliminate their legacy polling engine, not need to add a PC at each well, and get more useful data faster."
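Part of what lets MQTT consumers discover data without point-by-point mapping is wildcard subscription: a subscriber asks for a topic filter such as `oilfield/+/pressure`, and the broker delivers every matching topic, including ones published by devices added later. The function below sketches the topic-matching rules from the MQTT specification: `+` matches exactly one level, `#` matches all remaining levels (including the parent level itself), and wildcards in the first level don't match topics starting with `$`. In a real system the broker does this matching; the `oilfield/...` topic names are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Wildcards in the first level must not match system topics such as "$SYS/..."
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level; matches the
            # remaining levels, including none ("a/#" also matches "a")
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # "+" matches any single level; otherwise exact match
    return len(f_levels) == len(t_levels)


# A hypothetical subscriber watching every well's pressure tag
print(topic_matches("oilfield/+/pressure", "oilfield/well-17/pressure"))     # True
print(topic_matches("oilfield/+/pressure", "oilfield/well-17/tank1/level"))  # False
```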

Cox's second edge computing category is local operating interfaces that can run with minimal hardware, which allows more local HMIs and greater production visibility, especially in case of network failures. "Previously, HMIs were physically wired to PLCs, but this was costly, usually dedicated to only one purpose, and users didn't like it because they couldn't add different software to any device like they can with apps on tablet PCs and smart phones," Cox explains. "This is why our Ignition Edge Panel is low cost and allows unlimited tags, so users can put an HMI anywhere. But it isn't just an island because it can network back to a corporate or central control system to send and manage data. This common, standard software and networking like MQTT is a big shift from proprietary because users can now add other software without having to change their infrastructure."

Building on protocol bridging and local interfaces, Cox's third edge category is the large return on investment (ROI) and value that edge computing can deliver by running complex algorithms, models and machine learning close to where production data is generated. "This means local analytics enabling instant tuning of processes using more sophisticated models, better production forecasts, and improved equipment failure models and predictions," says Cox.

Lessen latency

Once edge computing is organized and starts processing data on the periphery, its primary benefit is revealed as reducing network latency because so much less information has to be sent up and back from distant enterprise and cloud levels.

It's hard to get more on the edge than out in the ocean, but that's where edge computing devices can really prove their worth. For example, among its operations in the Austral and San Jorge basins, state-run Empresa Nacional del Petróleo (ENAP) Argentina operates five offshore oil and gas platforms near Magallenes. These production platforms report data from close to 146,000 tags and measurement points, and from 69 devices running Modbus RTU, Modbus TCP and other protocols, to five individual servers and a local supervisory server, which retransmits the data to a remote server onshore at ENAP's Magallenes Reception Battery (MRB). The operation also includes 15 clients, 80 screens, six MySQL servers and one SQL server.

However, due to bad weather, communication between the platform-based servers and the MRB is sometimes interrupted and data is lost, according to Gabriel Alejandro Acuña, electrical engineer at Weisz Bolivia SRL, which helped ENAP resolve its network issues. "All control points were isolated during these interruptions," said Acuña. "The supervisory staff had difficulty reviewing the information, and the management staff was totally isolated in obtaining information."

In a phased upgrade, Weisz and ENAP integrated the controls at the five platforms with web- and Java-based Ignition SCADA software from Inductive Automation. Each Ignition server was also given its own, independent database, enabled "transaction groups" to send data, and was configured with a hub-and-spoke topology to help ensure delivery. The Ignition server has double redundancy, so the SCADA system won't be out of service when a server is being maintained.
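Losing data whenever weather cuts the link to shore is the classic case for store-and-forward. Ignition provides its own store-and-forward engine; the Python class below is only a generic sketch of the idea, assuming a `send` callback that returns True when the upstream server acknowledges a record, and a hypothetical buffer limit.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Record = Tuple[float, str, float]  # (timestamp, tag, value)


class StoreAndForward:
    """Buffer records locally and forward them in order when the link allows."""

    def __init__(self, send: Callable[[Record], bool], max_records: int = 100_000):
        self._send = send  # returns True only if the upstream server acknowledged
        # Bounded: if an outage outlasts the buffer, the oldest records are dropped
        self._buffer: Deque[Record] = deque(maxlen=max_records)

    def record(self, rec: Record) -> None:
        self._buffer.append(rec)
        self.flush()

    def flush(self) -> int:
        """Forward as many buffered records as possible; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link is down: keep the record and retry on the next flush
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


# Usage with a simulated platform-to-shore link that drops out
link = {"up": False}
onshore: List[Record] = []


def send_to_onshore(rec: Record) -> bool:
    if link["up"]:
        onshore.append(rec)
        return True
    return False


saf = StoreAndForward(send_to_onshore)
saf.record((1.0, "platform1/flow", 42.0))
saf.record((2.0, "platform1/flow", 43.5))
pending_during_outage = saf.pending()
link["up"] = True
flushed = saf.flush()
```

Records are only removed from the buffer after a successful send, so an outage delays data rather than losing it, up to the buffer's capacity.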

"On the ground, a cluster of three servers was installed," explained Acuña. "These host a virtualized Ignition server, which contains the 'supervision' application that allows navigation between all applications from any location in the corporate network. This server has two projects, one for controlling the reception battery, and another application that allows supervisors and management personnel to navigate through all the applications at sea and on land as their user level allows. This configuration allows personnel in Buenos Aires to access information in Rio Gallegos.”

Besides improved availability and better monitoring and control of the five platforms and MRB, Acuña reports that Ignition's more capable and independent servers also let ENAP access corporate information in real-time; publish according to requirements of Argentina's Secretariat of Energy; generate reports for analytics required by other ENAP departments; access more asset management data; perform event auditing to register all accesses and modifications to the SCADA system; and move between applications without changing users.

To assist these edge initiatives, Weber adds that Rockwell Automation recently released its 1756 ControlLogix controller/compute module, which can perform data processing in its automation backplane and run Linux or Windows operating systems. It's also about to launch its 5480 CompactLogix controller with four Intel Core microprocessors, including two running Linux or Windows, which will enable users to develop their own applications, as well as secure data or send instructions to the controller side. Weber adds that users can likewise build and run applications on Rockwell Automation's recently released FactoryTalk Analytics Edge software. [Go to this issue's Product Exclusive section for more about the 5480 CompactLogix.]

"If you're thinking about deploying an edge architecture, you have address the infrastructure it will need, which means assessing existing devices and networking," adds Weber. "You also have to decide where your computing surfaces will be. Will it sit right where the production equipment is? If so, what data speed will it need, and can it deliver values where needed? All this really begins with picking a problem you want to solve, defining it, determining if edge computing can really help, learning how to add its architecture, and making it secure. Artificial intelligence (AI) will help with this, as well as finding, organizing and publishing data."

Likewise, Tom Buckley, IoT global business development manager at Iconics, reports its newly revised IoTWorx software for edge applications is a subset of its Genesis 64 cloud-on-premise application. "IoTWorx employs the Windows 10 IoT Enterprise operating system and the Microsoft Azure IoT Edge runtime, which lets us put our software into Docker containers in edge devices," explains Buckley. "These containers divide applications into software components, which allows better maintenance and scalability. IoTWorx's next release in 1Q19 will let it run on Linux, which will let us address even smaller devices."

Buckley adds that once edge devices and software are in place, their analytics can monitor oil well pads and other remote sites that can't have separate, full-sized control systems in each location. "This means better predictive maintenance," says Buckley. "For instance, IoTWorx's analytics can do fault diagnostics by monitoring the data from sensors with preconfigured setpoints and thresholds. If one of these is exceeded, a fault event is triggered, real-time notifications are generated for staff, and based on the event, users can even decide beforehand if the edge device's initial response should include initiating a safe shutdown to prevent a potentially catastrophic event."
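The setpoint-and-threshold fault logic Buckley describes can be sketched as a list of rules, each with optional low and high limits and a flag, decided beforehand, for whether a violation should also request a safe shutdown. This is a generic Python illustration with hypothetical tag names and limits, not IoTWorx's rule engine; the `notify` and `shutdown` callbacks stand in for whatever messaging and interlock paths a real site uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class FaultRule:
    tag: str
    low_limit: Optional[float] = None
    high_limit: Optional[float] = None
    safe_shutdown: bool = False  # decided beforehand, per rule


def evaluate(readings: Dict[str, float], rules: List[FaultRule],
             notify: Callable[[str], None],
             shutdown: Callable[[str], None]) -> List[str]:
    """Check each rule against the latest readings and act on violations."""
    faults = []
    for rule in rules:
        value = readings.get(rule.tag)
        if value is None:
            continue  # no fresh reading for this tag
        if rule.high_limit is not None and value > rule.high_limit:
            msg = f"{rule.tag}={value} above {rule.high_limit}"
        elif rule.low_limit is not None and value < rule.low_limit:
            msg = f"{rule.tag}={value} below {rule.low_limit}"
        else:
            continue
        faults.append(msg)
        notify(msg)             # real-time notification to staff
        if rule.safe_shutdown:
            shutdown(rule.tag)  # pre-approved initial response
    return faults


rules = [
    FaultRule("casing_pressure_psi", high_limit=500.0, safe_shutdown=True),
    FaultRule("motor_temp_c", high_limit=90.0),
    FaultRule("flow_bpd", low_limit=1.0),
]
messages: List[str] = []
shutdowns: List[str] = []
faults = evaluate({"casing_pressure_psi": 520.0, "motor_temp_c": 85.0, "flow_bpd": 0.0},
                  rules, messages.append, shutdowns.append)
```

Keeping the shutdown decision in configuration, rather than in the evaluation code, mirrors the idea of deciding the edge device's initial response ahead of time.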

Wireless extends edge

Of course, just as it helps other networks make previously unworkable leaps, wireless is also helping edge computing devices, Industrial Internet of Things (IIoT) applications and cloud services reach components, signals and data they couldn't acquire otherwise.

For instance, to reduce overhead costs and increase efficiency, safety and reporting accuracy at the same time, Fairfield Oil and Gas Corp. in Oklahoma City, Okla., recently worked with Freewave Technologies Inc. to implement its ZumDash Small SCADA app in conjunction with the Amazon Web Services (AWS) cloud-computing service. ZumDash uses Freewave's ZumIQ application environment platform, and is deployed on its app-hosting and app-programmable ZumLink 900 series radios. Together, they allow users to monitor operations remotely, execute logic, visualize trends and generate reports, which in Fairfield's case means minimizing vehicle trips for manual sensor inspections, which can cost more than $20,000 per year.

Initial implementation by Fairfield included automating and monitoring of five wells, each with a maximum of three tanks and three pressure sensors. The edge solution used had to be vendor-neutral because it was too costly for Fairfield to remove its existing sensors and meters. Alarms, tank-level and pressure sensor data had to be accessible from web-enabled devices. Fairfield also wanted to:

- Access and control production from any device;

- Automate reporting with 24/7 access to real-time reports, daily reporting of pumper accuracy vs. automation accuracy, monthly reports of run tickets vs. production runs, and annual reporting of real-time decline curves;

- Implement proactive maintenance by identifying problems before they occur;

- View historical data and trends; and

- Automate alarms and alert protocols to web-based PC, Mac, Android or iOS devices, and provide: high-level alerts to crude purchasers, no-flow alerts after six hours of no flow, sudden drop alerts due to tank leaks, thefts or purchaser pickups, separation alerts of high bottoms due to too much water in crude vessels, high-pressure alerts, and flow alerts due to increases, decreases or stops.
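Two of the alarm conditions on that list are simple enough to show directly: a no-flow alert after six hours without flow, and a sudden-drop alert when tank level falls faster than expected. The Python sketch below assumes one sample per call with a flow rate and a tank level in inches, plus a hypothetical 6-in. sudden-drop threshold; only the six-hour window comes from Fairfield's requirements, and none of it is ZumDash code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class WellAlerts:
    no_flow_after: timedelta = timedelta(hours=6)  # Fairfield's no-flow window
    max_drop_in: float = 6.0  # hypothetical sudden-drop threshold, inches per sample
    last_flow_seen: Optional[datetime] = None  # stays None until flow is first seen
    last_level_in: Optional[float] = None

    def update(self, ts: datetime, flow_rate: float, tank_level_in: float) -> List[str]:
        alerts = []
        if flow_rate > 0.0:
            self.last_flow_seen = ts
        elif (self.last_flow_seen is not None
              and ts - self.last_flow_seen >= self.no_flow_after):
            # Re-raised on every sample until flow resumes; a real system
            # would latch the alarm and wait for acknowledgement
            alerts.append("NO_FLOW")
        if (self.last_level_in is not None
                and self.last_level_in - tank_level_in > self.max_drop_in):
            alerts.append("SUDDEN_DROP")  # leak, theft or purchaser pickup
        self.last_level_in = tank_level_in
        return alerts


t0 = datetime(2019, 1, 1, 0, 0)
well = WellAlerts()
history = [
    well.update(t0, 5.0, 100.0),
    well.update(t0 + timedelta(hours=3), 0.0, 100.0),
    well.update(t0 + timedelta(hours=6), 0.0, 99.0),
    well.update(t0 + timedelta(hours=7), 0.0, 80.0),
]
```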

To avoid disrupting any of Fairfield's operations, Freewave's software engineers also set up a Modbus-based simulator to validate the ZumDash solution as part of the development process. Next, a 900-MHz ZumLink Z9-PE radio was added at each well to pull in sensor data from the existing OleumTech gateways via Modbus and deliver it via cell modem. Each Z9-PE radio was preloaded with ZumIQ software for full app-programmability, and has 512 MB of RAM and 1 GB of flash memory that can store up to 30 days of Fairfield's data.

In addition, with a ZumIQ-developed app, each radio converts Modbus data to MQTT for publishing real-time data to AWS in the cloud. Because status and trend data from the wells are now visible and accessible on web-based devices, staff trips to the sites were significantly reduced, as was the cost of sending every data packet to a PLC network or the cloud. Finally, to reduce broadband and other data expenses, each Z9-PE also has ZumIQ logic that sends alarms to AWS and its app tools for distribution if process control limits are exceeded, and delivers them to credentialed staff when certain well conditions are met. Fairfield expects that real-time alarms aided by ZumDash will minimize spills that cost $15,000 each in remediation fees.
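Converting Modbus data to MQTT usually means turning raw 16-bit registers into engineering values and wrapping them in a topic and payload. Many devices store a 32-bit IEEE 754 float across two consecutive registers, with the word order varying by vendor. The Python sketch below assumes that encoding, a hypothetical `wells/<well>/<tag>` topic layout and a JSON payload; it is not the ZumIQ app, and the actual publish call to AWS is left out.

```python
import json
import struct
from typing import Sequence, Tuple


def registers_to_float(regs: Sequence[int], word_swap: bool = False) -> float:
    """Decode an IEEE 754 float stored across two 16-bit Modbus registers."""
    if len(regs) != 2:
        raise ValueError("a 32-bit float spans exactly two registers")
    high, low = (regs[1], regs[0]) if word_swap else (regs[0], regs[1])
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]


def to_mqtt_message(well: str, tag: str, regs: Sequence[int], ts: int,
                    word_swap: bool = False) -> Tuple[str, str]:
    """Build a (topic, JSON payload) pair; publishing it is left to an MQTT client."""
    value = registers_to_float(regs, word_swap)
    topic = f"wells/{well}/{tag}"  # hypothetical topic hierarchy
    payload = json.dumps({"value": round(value, 3), "ts": ts})
    return topic, payload


# Registers 0x42F7, 0x0000 hold the float 123.5 in high-word-first order
topic, payload = to_mqtt_message("well-01", "tank1_level_in", [0x42F7, 0x0000], ts=1546300800)
print(topic)    # wells/well-01/tank1_level_in
print(payload)  # {"value": 123.5, "ts": 1546300800}
```

Getting the word order wrong produces wildly incorrect values rather than an error, so the `word_swap` setting is worth checking against a known reading during commissioning.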

Brian Joe, global product manager for Emerson's wireless group, reports that its PlantWeb Insight software can run standalone, on a server or in an edge gateway; take data wirelessly from multiple pressure, temperature, level, vibration and other sensors; and run it through analysis models to give users better data for more informed decisions. "This type of data has been gathered for decades, but most of it was done manually and periodically, so it often wasn't consistent or reliable, and couldn't improve decisions," says Joe. "Especially with wireless, we can add far more data points and monitor them continuously. Instead of getting one measurement per month, we can now get hundreds per hour, and get much better trends and analysis."

Joe adds that 3M recently implemented PlantWeb Insight for steam traps and PlantWeb Insight for pumps at its chemical plant in Decatur, Ala., which use pre-engineered algorithms to analyze asset health, and alert staff to upcoming equipment issues. Staff at 3M implemented the software on five problematic steam traps at its boiler house, and it immediately alerted them to four failed traps, representing $100,000 in added energy costs if they hadn't been repaired. Likewise, 3M added PlantWeb Insight to two chiller water pumps, and now gets continuous measurements and reliable, consistent, holistic data on their health, which allows the company to reduce costly manual rounds, while staffers view their condition on an intuitive dashboard.

"Users can run edge devices and software close to their processes, and get results in minutes or seconds, instead of hours or days," adds Joe. "This lets them identify abnormal situations as they occur. For example, heat exchangers get mucked up, so they're usually cleaned every six months. Now, we can recognize their typical fouling rate to develop an algorithm, and use daily temperature, flow and pressure data to determine more accurately if they need to be cleaned or not, and send alerts when they do. This can save a lot on power and other costs."

Monitoring moves to control

While most edge initiatives presently focus on monitoring and moving data, some are already pushing to allow increasing degrees of control.

"Cloud computing to most companies means sending data to the cloud where it's stored and analyzed, but not trying to do control from the cloud. Skkynet is different," says Skkynet's McIlvride. "Our DataHub software runs in the plant, aggregating all kinds of data and mirroring it in real time to our SkkyHub cloud service, allowing bidirectional communication. That means instructions can be emitted from the cloud for closed-loop control. The main benefit of edge computing is reducing data overhead and making the best use of available network bandwidth, while responding quickly to events in the plant. This doesn't mean users have to keep lower-level control just on the devices. We see both the edge and the cloud as essential to robust and effective IIoT."

Even though gateways and other edge components are increasingly easy to use, there's still a learning curve in migrating from legacy networking to devices that are simpler and smarter, but still represent a substantial change for individual applications. Iconics' Buckley has several suggestions for getting edge devices up and running, and giving them the best chance for success:

- Enlist OT and IT personnel to communicate and collaborate on any edge project. OT knows the process application and its requirements, while IT likely knows the most about which edge devices to use.

- Develop requirements and specifications with input from everyone, including the application's latency, whether real-time response is needed, and the time needed to reach the cloud and back as part of latency calculations.

- Decide what information needs to go to the cloud and other enterprise levels, and on what schedule it needs to be sent.

- Determine what specific supporting technologies will meet the project's specifications, such as wired or wireless networking, and whether sensors will be connected via Bluetooth, radio frequency or local wiring.

- Because device drivers are still needed to reach many legacy components, check if planned edge devices need protocol translators for OPC UA, BACnet, SNMP and others.
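The advice to include the trip to the cloud and back in latency calculations is easy to turn into arithmetic. The sketch below compares two hypothetical placements against a hypothetical 100-ms requirement; every number and name in it is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LatencyBudget:
    """Hypothetical per-placement latency estimate, in milliseconds."""
    acquisition_ms: float         # sensor read / I/O scan
    processing_ms: float          # analytics or control logic
    network_round_trip_ms: float  # 0 when the decision is made at the edge

    def total_ms(self) -> float:
        return self.acquisition_ms + self.processing_ms + self.network_round_trip_ms


def placements_meeting(requirement_ms: float, options: Dict[str, LatencyBudget]) -> List[str]:
    """Return the candidate placements whose end-to-end latency fits the requirement."""
    return [name for name, b in options.items() if b.total_ms() <= requirement_ms]


options = {
    "edge": LatencyBudget(acquisition_ms=10, processing_ms=5, network_round_trip_ms=0),
    "cloud": LatencyBudget(acquisition_ms=10, processing_ms=20, network_round_trip_ms=150),
}
print(placements_meeting(100, options))  # ['edge']
```

The cloud option fails here only because of its round trip (180 ms in total), which is exactly the term that is easy to forget when latency is estimated from processing time alone.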

Daniel Quant, strategic development vice president at Multi-Tech Systems Inc., adds the edge of the network resides in the final assets, but the architecture of PLCs and DCSs reporting to local SCADA systems that normalize their data—though highly desirable for asset-heavy, mission-critical networks such as oil and gas and utilities—is giving way to cloud-computing services like Azure, IBM and AWS. "Existing DCS or SCADA systems are sending data to these big data AI services, but it's generally one-way, out of the SCADA network, to provide big data modelling of historical performance, such as demand response. It's like adding another layer to the onion," says Quant. "However, greenfield applications or other new equipment will just talk to the cloud directly. Tank monitoring at a well site, pipeline leak detection, or utility transformer and capacitor bank control are great examples of how an IIoT architecture is well suited to distribution automation solutions."

As a result, Quant reports that all of the intelligent platforms MultiTech has developed over the past five years have steadily added memory and made programmability easier for developers and users alike. For example, having the Node-RED development environment, which originated at IBM, built into its industrial programmable gateways lets users perform their micro data services at the edge without having to be experts in traditional programming languages. "Doing hard coding at the edge isn't workable for everyone to connect process-oriented power, operating systems and silo applications that need to be maintained and updated," explains Quant. "Previously, DCS and SCADA addressed all the pain points in the middle, but this often meant users had to do it manually with a small group of highly skilled engineers. Now, developers want speed of development at the application layer without having to get stuck in the weeds of the network layers or complex security architectures. Meanwhile, users want their applications to be more transparent and accessible to cloud and AI via secure wireless IoT that can deliver on these tasks."

Quant adds that MultiTech further aids edge applications with inexpensive, long-range, wide-area network (LoRaWAN and LTE-M/NB1) Arm Mbed OS programmable wireless modules, which can send data from high-layer APIs and perform provisioning, activation and lifecycle management, and do it securely with secure-key management and LoRaWAN-based or SIM encryption, all essential for deploying and scaling endpoints in volume over a more than 10-year lifespan. "This lets them move compute power to the edge to deliver actionable data to higher-up platforms for centralized analysis and model building. Creating assets with intelligence at the edge that have processing power, memory and operating systems that can be programmed from a higher level is a must to scale up to the billions of devices being deployed for IIoT," says Quant.
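LoRaWAN uplinks are small (at the slowest data rates, a single uplink may carry only a few dozen bytes or less, depending on region), so edge devices typically send compact binary payloads instead of text. The Python sketch below packs a hypothetical reading into 6 bytes using scaled integers; the field layout is an assumption, not a MultiTech or Arm Mbed OS format, and encryption is left to the LoRaWAN stack.

```python
import struct
from typing import Tuple

# Hypothetical 6-byte layout, big-endian:
#   int16  temperature in 0.01 deg C  (-327.68 to 327.67)
#   uint16 pressure in 0.1 kPa        (0 to 6553.5)
#   uint8  battery percent
#   uint8  status flags (bit field)
PAYLOAD_FORMAT = ">hHBB"


def encode(temp_c: float, pressure_kpa: float, battery_pct: int, flags: int) -> bytes:
    """Pack one reading as scaled integers; raises struct.error if out of range."""
    return struct.pack(PAYLOAD_FORMAT, round(temp_c * 100), round(pressure_kpa * 10),
                       battery_pct, flags)


def decode(payload: bytes) -> Tuple[float, float, int, int]:
    """Inverse of encode(), as a network server or cloud decoder would apply it."""
    t, p, battery, flags = struct.unpack(PAYLOAD_FORMAT, payload)
    return t / 100, p / 10, battery, flags


frame = encode(23.45, 101.3, 87, 0b01)
print(len(frame))     # 6
print(decode(frame))  # (23.45, 101.3, 87, 1)
```

The same reading sent as JSON text would take several times as many bytes, which matters when every uplink counts against airtime and battery life.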

Data centers move down, too

Logically, once some basic computing functions make their way to the edge, it's not long before even more and larger data centers show up there, too.

"Over the last 10 years, IIoT has been adding gateways between sensors, embedded devices and back-end servers for protocol translation, data aggregation and filtering before the data is pushed to a data center at the enterprise or cloud level for analysis and use by other business applications like MES. However, as more and more sensors and embedded devices are added, traditional aggregation isn't enough," says Shahram Mehraban, vice president of marketing and product management at Lantronix. "At the same time, gateways are getting more powerful and adding control functions. Usually, gateways only do simple pattern matching analytics, but now they're doing complex event processing, applications processing and some control and actuation functions.

"For instance, some utilities are doing smart metering, which begins with simple remote meter reading, but can quickly progress to demand-response programs like Southern California Edison's (SCE) that gives discounts to home owners in exchange for some limited control of customers' Nest thermostats. These and other edge applications need local, micro data centers, but instead of the PC with a SCADA program they used previously, they're now using Intel based servers with Cisco switches to aggregate all their residential data, adding real-time weather forecast information, analyzing it all, and taking action like turning those Nests on and off during peak times"

On the plant floor, Mehraban reports that sensors tracking temperature, vibration and other parameters are gaining many Nest-like capabilities thanks to their own onboard microprocessors, so they also need micro data centers, which are typically small, rack-mounted units with four or fewer servers that use Cisco switches and need some cooling and power. Lantronix makes a remote management solution for data centers, Secure Lantronix Console Manager (SLC 8000), which allows out-of-band access to critical networking equipment via a secondary network such as cellular.

"We're seeing more and more data center devices deploying at the edge," says Mehraban. "For example, SCE has been using SLC for years, but now as they are deploying micro data centers in their unmanned substations, they are also deploying SLCs so that they can reach them remotely, and try to bring them up if and when there's a network outage."

To implement edge computing and micro data centers, Mehraban recommends that users:

- Determine the use case for the edge application, including the size of its data requirements (its total data points and how often they'll need to communicate), what types of analytics will be needed, and whether it has a control loop that needs to be deterministic;

- Investigate power requirements for cooling and other tasks, and recognize that real-time applications will need more power, while those with many more data points will need more storage;

- Evaluate whether the application is mission-critical and/or safety-related, and decide if fault-tolerant or redundant capabilities are required;

- Examine where the computing is best performed. Does it need to be close to the control room or enterprise? Can it be remote or unmanned? If so, how will remote access be achieved?

- Construct an analytics model that addresses how data will come in, how fast decisions need to be made, and what will be done with the analytics insights. Again, the model needs to serve the use case.
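The data-sizing question in the first step often comes down to simple arithmetic: data points × update rate × bytes per sample. The Python sketch below assumes 16 bytes per stored sample (timestamp, value and quality) and 30 days of local retention. Both figures are illustrative assumptions, not recommendations, and compression or report-by-exception would lower the results.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class EdgeUseCase:
    data_points: int             # total tags the edge node collects
    updates_per_second: float    # update rate per tag
    bytes_per_sample: int = 16   # assumed: timestamp + value + quality
    retention_days: int = 30     # assumed local retention period

    def bytes_per_second(self) -> float:
        """Raw data rate if every sample is kept and forwarded."""
        return self.data_points * self.updates_per_second * self.bytes_per_sample

    def uplink_mbps(self) -> float:
        """Same rate expressed in megabits per second for link planning."""
        return self.bytes_per_second() * 8 / 1e6

    def storage_bytes(self) -> float:
        """Local storage needed to hold the full retention window."""
        return self.bytes_per_second() * SECONDS_PER_DAY * self.retention_days


if __name__ == "__main__":
    uc = EdgeUseCase(data_points=5_000, updates_per_second=1.0)
    print(uc.bytes_per_second(), uc.uplink_mbps(), uc.storage_bytes() / 1e9)
```

For example, 5,000 points sampled once per second works out to 80,000 bytes/s (about 0.64 Mbit/s) and roughly 207 GB over 30 days, which shows quickly whether the network link or the local disk is the tighter constraint.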

Aids, aided by IT-OT convergence

One unexpected benefit of edge computing is that it can apparently enable OT and IT professionals to collaborate more effectively.

"Edge devices bring IT and OT together for common goals like predictive maintenance because the edge's field devices push more data to higher-level systems more often," says Endress+Hauser's Ochsenreither. "Much of this data was already in many instruments, but it was locked in, and could only be downloaded manually. Plus, users would have to print out codes, look them up in manuals, learn what to do, and go back and make adjustments. Now, we have edge devices like our SGC 500 gateway that can get more and better information automatically. The process still runs as before, but now users have in-depth diagnostics and can learn what needs to be done before they go out. SGC 500 does analytics, health diagnostics and predictive tasks, but all its software applications use data from other edge devices. It's been available in Europe since last June, and is coming to the U.S. in the first half of this year."
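The shift Ochsenreither describes, from looking up printed codes in manuals to having a gateway interpret them, can be sketched as a lookup that maps raw diagnostic codes to the four NAMUR NE 107 status categories plus a recommended action. The codes and remedies below are hypothetical placeholders, not Endress+Hauser's; the priority ordering and the choice to treat unknown codes as maintenance items are also assumptions.

```python
from enum import Enum


class Ne107(Enum):
    """NAMUR NE 107 status signal categories, plus a 'good' state."""
    OK = "Good"
    FAILURE = "Failure"
    FUNCTION_CHECK = "Function check"
    OUT_OF_SPEC = "Out of specification"
    MAINTENANCE = "Maintenance required"


# Hypothetical device codes -> (NE 107 category, recommended action).
DIAGNOSTICS = {
    "DIAG_000": (Ne107.OK, "No action"),
    "DIAG_001": (Ne107.FAILURE, "Check sensor wiring"),
    "DIAG_002": (Ne107.FUNCTION_CHECK, "Simulation active; end simulation"),
    "DIAG_003": (Ne107.OUT_OF_SPEC, "Process outside sensor range; verify conditions"),
    "DIAG_004": (Ne107.MAINTENANCE, "Schedule sensor cleaning"),
}

# Assumed priority order, most urgent first.
PRIORITY = [Ne107.FAILURE, Ne107.FUNCTION_CHECK, Ne107.OUT_OF_SPEC, Ne107.MAINTENANCE]


def triage(device_codes):
    """Turn raw (tag, code) pairs into a work list, most urgent first.

    Healthy devices are dropped; unknown codes are flagged for maintenance
    so they are not silently ignored.
    """
    work = []
    for tag, code in device_codes:
        category, action = DIAGNOSTICS.get(
            code, (Ne107.MAINTENANCE, f"Unknown code {code}; consult manual")
        )
        if category is not Ne107.OK:
            work.append((PRIORITY.index(category), tag, category.value, action))
    work.sort()
    return [(tag, category, action) for _, tag, category, action in work]
```

With this kind of table on the gateway, a technician sees "PT-7: Failure, check sensor wiring" before leaving the control room instead of decoding a number in the field.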

Similarly, because remote and/or limited-connectivity operations must prioritize the data they send to the enterprise or cloud, Endress+Hauser also recently developed its Smart System, which combines sensing, measurement and gateway functions to efficiently collect, aggregate and store information before sending it to the cloud, according to Ochsenreither. It was released in Europe just over a year ago, and is expected to be available in the U.S. soon. "Smart System measures continuously, and users decide whether it updates every 15, 30 or 60 minutes," says Ochsenreither. "It also takes a snapshot of data just before sending it to the cloud, checks the diagnostics of connected sensors, and reports on their health and whether anything is wrong."
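A store-and-forward loop along these lines (continuous local measurement, a user-selected 15-, 30- or 60-minute upload, a snapshot of the latest value and a sensor health flag) might look like the Python sketch below. The class name, the summary fields (min, max, mean) and the payload layout are assumptions for illustration, not Smart System's actual data format.

```python
from statistics import mean

ALLOWED_INTERVALS_MIN = (15, 30, 60)


class StoreAndForward:
    """Buffer readings locally and publish one compact summary per interval."""

    def __init__(self, interval_min: int):
        if interval_min not in ALLOWED_INTERVALS_MIN:
            raise ValueError(f"interval must be one of {ALLOWED_INTERVALS_MIN}")
        self.interval_s = interval_min * 60
        self.buffer = []          # (timestamp_s, value) pairs kept on the device
        self.last_sent_s = 0.0

    def record(self, t_s: float, value: float) -> None:
        """Store one continuous measurement locally."""
        self.buffer.append((t_s, value))

    def maybe_send(self, now_s: float, sensor_ok: bool):
        """Return a payload if the interval has elapsed and data exists, else None."""
        if now_s - self.last_sent_s < self.interval_s or not self.buffer:
            return None
        values = [v for _, v in self.buffer]
        payload = {
            "snapshot": values[-1],   # latest value, taken just before sending
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
            "samples": len(values),
            "sensor_health": "ok" if sensor_ok else "check",
        }
        self.buffer.clear()
        self.last_sent_s = now_s
        return payload
```

Sending one summary per interval instead of every reading is what lets a limited-bandwidth site stay within its link budget, while the local buffer still holds the detail until each upload.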


Step up, jump in

Getting involved with edge computing and automation is designed to be relatively simple and straightforward, but it does require redefining some traditional relationships with legacy devices and networks—and the willingness to change some mindsets, too.

"Data processing is heading towards more distributed hardware and software, where users don't have to stick with one vendor, but can instead employ best-in-class devices," says Inductive's Cox. "This means leveraging open, secure standards like OPC UA, MQTT and RESTful APIs. This is different from networking via EtherNet/IP and Profinet, and is more like the Internet, where HTML is transmitted over HTTP. This is where open-standard, plug-and-play networking can unlock some real power for the industrial world. For instance, MQTT is like HTTP, and Sparkplug messaging on top of it defines data before it's transferred. This is what allows two different systems to access and understand data from each other, and know whose devices are being used and what their data means without the mapping that used to be required.

"Anyone can say their network protocol is open, but if it's not truly interoperable and easy to understand, then it's not open. Openness means plug-and-play access, inspection and data transfers between two systems or devices that don't require users to write mappings or translate protocols. This is what's enabling data science, machine learning, and building and applying models to be combined with operations. This is where real IT-OT convergence happens."
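Part of what Sparkplug B standardizes is a fixed MQTT topic namespace, `spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]`, so any subscriber can tell which node or device a message came from, and whether it is a birth, death, data or command message, without custom mapping. The Python sketch below only builds and checks topic strings; the group, node and device names are hypothetical, and the Protobuf payload encoding and host-application STATE messages are left out.

```python
NAMESPACE = "spBv1.0"
NODE_TYPES = {"NBIRTH", "NDEATH", "NDATA", "NCMD"}     # edge-node-level messages
DEVICE_TYPES = {"DBIRTH", "DDEATH", "DDATA", "DCMD"}   # device-level messages


def sparkplug_topic(group_id, message_type, edge_node_id, device_id=None):
    """Build a Sparkplug B topic, enforcing node vs. device message rules."""
    if message_type in NODE_TYPES:
        if device_id is not None:
            raise ValueError(f"{message_type} is a node message; no device_id allowed")
        parts = [NAMESPACE, group_id, message_type, edge_node_id]
    elif message_type in DEVICE_TYPES:
        if device_id is None:
            raise ValueError(f"{message_type} is a device message; device_id required")
        parts = [NAMESPACE, group_id, message_type, edge_node_id, device_id]
    else:
        raise ValueError(f"unsupported message type: {message_type}")
    # IDs must not contain MQTT level separators or wildcards.
    for part in parts[1:]:
        if not part or any(ch in part for ch in "/+#"):
            raise ValueError(f"invalid topic element: {part!r}")
    return "/".join(parts)


def parse_sparkplug_topic(topic):
    """Split a Sparkplug B node/device topic back into its named elements."""
    parts = topic.split("/")
    if len(parts) not in (4, 5) or parts[0] != NAMESPACE:
        raise ValueError(f"not a Sparkplug B node/device topic: {topic}")
    device_id = parts[4] if len(parts) == 5 else None
    sparkplug_topic(parts[1], parts[2], parts[3], device_id)  # validates the rules
    return {
        "group_id": parts[1],
        "message_type": parts[2],
        "edge_node_id": parts[3],
        "device_id": device_id,
    }
```

For example, `sparkplug_topic("PlantA", "DDATA", "Gateway1", "Flowmeter7")` yields `spBv1.0/PlantA/DDATA/Gateway1/Flowmeter7`. Because every vendor's edge node follows the same layout, a subscriber on `spBv1.0/PlantA/#` can discover devices from their birth messages rather than from a hand-built tag map.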
