This Control Talk column appeared in the January 2021 print edition of Control.

Greg: We have here an exceptional example of how a university is improving the practical use of existing tools for process control and optimization such as model-predictive control (MPC) and real-time optimization. These well-established methods are being supplemented with new technologies to provide more comprehensive and reliable performance as well as facilitate better understanding and maintainability.

John Hedengren introduces us to what’s possible, and a course that provides hands-on opportunities to learn and be part of advances in technology. John leads the Process Research and Intelligent Systems Modeling (PRISM) group at Brigham Young University (BYU) in Provo, Utah. His interests are in combining data science, optimization and automation, with current projects in hybrid nuclear energy system design and unmanned aerial vehicle photogrammetry. He earned a doctoral degree at the University of Texas at Austin and worked five years at the ExxonMobil Chemical Baytown, Texas, plant and central engineering group for advanced control applications prior to joining BYU in 2011.

John, what preparation is required to take your course?

John: Motivation is the most important prerequisite for the Process Dynamics and Control Course, especially with many other good online resources that can fill in knowledge gaps. About 100 students take the course each year at BYU. About nine years ago, I started posting my content online and now there are 10,000 viewers each day. With the shift to online learning in 2020, many others are also posting content online. Some of the viewers are university students and some are professionals needing information to solve a specific problem. One of the things that intimidates some students is the programming. My courses use MATLAB or Python. There are introductory short courses (2-3 hours) for those who need help getting started.

Begin MATLAB | https://apmonitor.github.io/begin_matlab

Begin Python | https://apmonitor.github.io/begin_python

To make sure I didn’t skip any steps, my teenage son helped me prepare a first draft of the Python course. He had just taken an introductory Python course and did a very good job explaining programming to a beginner with many hands-on exercises. I wanted a beginner to prepare the first course because I’ve been programming for many years and needed the perspective of someone starting for the first time.

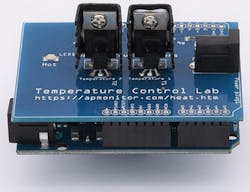

Figure 1: Abe Martin, a graduate research assistant at BYU, helped create an Arduino-based Temperature Control Lab that is now used by many professionals and 70 universities, with 7,000 units produced so far.

Greg: What are some of the ways process control education is changing?

John: A 2017 study by the American Institute of Chemical Engineers sponsored by the National Science Foundation called for new emphasis on process safety, applied statistics, process dynamics and applied process control through new teaching materials and effective integration into the curriculum. This is in response to some universities dropping the process control requirement or absorbing the control course into a unit operations lab to make room for other topics. Industrial feedback is that students need these topics and that universities should find new ways to teach practical process control. Instructors have responded to this challenge by creating hands-on learning experiences for the dynamics and control course with physical labs.

A graduate research assistant at BYU (Abe Martin) helped create an Arduino-based Temperature Control Lab (Figure 1). Jeff Kantor at Notre Dame, Carl Sandrock at the University of Pretoria, and Samvith Rao at MathWorks helped with the software interface. Many others have helped with problem statements and language translation, and have given feedback from their control courses. The community effort has had a positive impact on process control education, and the lab is used by many professionals and 70 universities, with 7,000 units produced. Process control education is changing to supplement theory and math with hands-on learning labs. It is also changing with more online resources for professionals who need just-in-time continuing education.

Greg: How are physics-based models, which I call first-principle models, developed and used?

John: Physics-based models come from engineering principles such as material and energy balances. It is exciting for students to discover that the physics-based equation solutions can agree with step-test response data. Commercial physics-based simulators are also useful for generating the dynamic data for step-test regression but rarely expose the underlying equations. Physics-based equations give students a simplified fundamental understanding such as the approximate time constant for a process or an approximate gain. Students see the effect of process design decisions on the controllability of the process. Physics-based understanding is also important to understand results from empirical tests.
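As a rough illustration of how an energy balance yields an approximate gain and time constant, the sketch below uses a lumped model of a small heated element. The model form and every parameter value (`m`, `cp`, `U`, `A`, `alpha`) are assumptions chosen for illustration and are not taken from the course or the Temperature Control Lab.

```python
# Lumped energy balance for a small heated element (all values hypothetical):
#   m*cp*dT/dt = U*A*(T_amb - T) + alpha*Q
# T is temperature (degC), Q is heater output (%), alpha converts % to watts.

def gain_and_time_constant(m, cp, U, A, alpha):
    """Return (K, tau) read directly from the linear energy balance."""
    K = alpha / (U * A)       # steady-state gain, degC per % heater
    tau = m * cp / (U * A)    # time constant, seconds
    return K, tau

def simulate_step(m, cp, U, A, alpha, T_amb, Q, t_end, dt=0.1):
    """Explicit Euler integration of the balance, starting at T = T_amb."""
    T = T_amb
    history = [(0.0, T)]
    n_steps = int(round(t_end / dt))
    for k in range(1, n_steps + 1):
        dTdt = (U * A * (T_amb - T) + alpha * Q) / (m * cp)
        T += dTdt * dt
        history.append((k * dt, T))
    return history

if __name__ == "__main__":
    params = dict(m=0.004, cp=500.0, U=10.0, A=0.0012, alpha=0.01)
    K, tau = gain_and_time_constant(**params)
    hist = simulate_step(T_amb=23.0, Q=50.0, t_end=tau, **params)
    fraction = (hist[-1][1] - 23.0) / (K * 50.0)
    print(f"K = {K:.3f} degC/%, tau = {tau:.1f} s")
    print(f"fraction of final change reached at t = tau: {fraction:.3f}")
```

The simulated step reaches roughly 63% of its final change at `t = tau`, which is the same feature a student would look for in step-test data when checking the physics-based estimate against an empirical one.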

A few years ago, we were switching the method to regulate the pressure of a gas-phase polymer reactor. We fixed the catalyst poison flow (old controller output) and performed a step test on the fluidized-bed level (new controller output) to get the pressure response. The pressure moved in the opposite direction to the expected response. The multi-hour step test failed to give us the data needed for the initial PID controller because of a disturbance that occurred during the test.

We turned to a simplified physics-based model to give us an approximate time constant and gain. This gave us confidence to repeat the step test. This time, the expected and measured results matched, and we implemented the new control scheme with substantial benefits to the company. I share this experience with my students to demonstrate that physics-based models provide another perspective to empirical methods and also provide fundamental insights. (Figure 2.)

Figure 2: Controller design based on a combination of physics-based models and empirical data provides fundamental insights potentially missed using only one method or the other.

Greg: How is model identification done and are controllers in manual?

John: Model identification with manual step response data is easy to understand but not desirable for running processes that need to remain in automatic control mode. Manual step, doublet, or PRBS tests are a good exercise for someone learning graphical fitting or regression methods for the first time. Closed-loop identification is now a well-developed technology that facilitates assessment and retuning of MPC or PID controllers. Historical data can also be mined for periods where the controller has manual steps or there are setpoint changes in automatic mode. Model identification is only one part of assessing or improving control performance. A developing area is the use of reinforcement learning to adaptively quantify and improve controller performance.
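A minimal sketch of the regression approach to model identification is shown below. It assumes a first-order model with no dead time, fits the discrete form `y[k+1] = a*y[k] + b*u[k]` by least squares, and converts `a` and `b` to a gain and time constant. The doublet test data are synthetic, and the function names are hypothetical rather than part of any particular package.

```python
import math

def simulate_first_order(u, K, tau, dt):
    """Generate y for y[k+1] = a*y[k] + b*u[k] with y[0] = 0."""
    a = math.exp(-dt / tau)
    b = K * (1.0 - a)
    y = [0.0]
    for uk in u[:-1]:
        y.append(a * y[-1] + b * uk)
    return y

def fit_first_order(u, y, dt):
    """Least-squares fit of y[k+1] = a*y[k] + b*u[k]; returns (K, tau).

    u and y are deviation variables (initial steady state subtracted).
    """
    # Sums that form the 2x2 normal equations for the unknowns a and b
    s_yy = sum(yk * yk for yk in y[:-1])
    s_yu = sum(yk * uk for yk, uk in zip(y[:-1], u[:-1]))
    s_uu = sum(uk * uk for uk in u[:-1])
    r_y = sum(yk * yn for yk, yn in zip(y[:-1], y[1:]))
    r_u = sum(uk * yn for uk, yn in zip(u[:-1], y[1:]))
    det = s_yy * s_uu - s_yu * s_yu
    if abs(det) < 1e-12:
        raise ValueError("test data do not excite the process enough")
    a = (r_y * s_uu - r_u * s_yu) / det
    b = (s_yy * r_u - s_yu * r_y) / det
    if not 0.0 < a < 1.0:
        raise ValueError("fit is not a stable first-order response")
    return b / (1.0 - a), -dt / math.log(a)

if __name__ == "__main__":
    # Synthetic doublet test: rest, step up, step down, return to rest
    u = [0.0] * 5 + [10.0] * 60 + [-10.0] * 60 + [0.0] * 60
    y = simulate_first_order(u, K=2.0, tau=30.0, dt=1.0)
    K_fit, tau_fit = fit_first_order(u, y, dt=1.0)
    print(f"K = {K_fit:.3f}, tau = {tau_fit:.2f}")
```

Because the fit only needs paired input and output samples, the same regression could in principle be applied to historical data segments, although measurement noise, dead time, and closed-loop correlation all call for more careful methods than this sketch.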

Greg: What guidance is offered on using MPC versus standard feedback (PID) control?

John: The simplest solution that meets the control requirements is typically the best. PID controllers have been the default option because of well-supported configuration in standard industrial control systems. MPC is now a standard controller option in more systems, not just as an add-on through an external server with an OPC connection.

Figure 3: PID control is like steering a car looking only in the rearview mirror. With MPC, however, the driver can also look ahead and make corrections that anticipate the effect of current moves on future outcomes.

Sometimes PID is insufficient for dealing with nonlinear or complex dynamics and MPC is required to anticipate future constraints or optimize within a range. A complex cascade or feedforward PID control network may also be replaced with a single MPC application to simplify the solution. MPC was formerly reserved for large and complex industrial refinery control applications but is now applied to many disciplines. There are many commercial and open-source MPC packages. An MPC package that I maintain is the Gekko Optimization Suite. It is downloaded about 10,000 times each month and users share control and optimization applications in publications and online through StackOverflow questions. I also teach an online course that starts each year in January for those who would like to know more about MPC with some exercises that compare PID, Linear MPC, and Nonlinear MPC. (See Figure 3.)

I like to describe the difference between PID and MPC with a driving analogy. With feedback control the driver is making adjustments to the steering angle while looking backwards to assess the position relative to the target lines. It is driving forward while only looking in the rearview mirror. Predictive control (MPC) allows that driver to also look ahead and make corrections that anticipate the effect of current moves on future outcomes. An inaccurate model distorts the view ahead and may lead to worse performance than with feedback control only. An accurate model gives a clear anticipation of future constraints and produces a reliable future move plan. (See Figure 4.)
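The look-ahead idea can be sketched in a few lines of plain Python. This is a deliberately stripped-down predictive controller, not the algorithm used by Gekko or any commercial package: it assumes a first-order model, plans a single constant move over the prediction horizon, clips that move to the actuator limits, and omits the feedback bias correction that practical MPC adds. All names and numbers are hypothetical.

```python
import math

def mpc_move(y_now, sp, a, b, horizon, u_min, u_max):
    """Constant move that minimizes squared setpoint error over the horizon.

    Model: y[k+1] = a*y[k] + b*u[k]; predicted y[k+j] = f_j + g_j*u.
    """
    num = den = 0.0
    g = 0.0       # effect of a constant u on the output j steps ahead
    f = y_now     # output j steps ahead if u were held at zero
    for _ in range(horizon):
        g = a * g + b
        f = a * f
        num += g * (sp - f)
        den += g * g
    u = num / den                       # unconstrained least-squares move
    return min(max(u, u_min), u_max)    # respect actuator limits

def run_closed_loop(K_model, tau_model, K_plant, tau_plant, sp,
                    steps, dt=1.0, horizon=20):
    """Apply only the first planned move each cycle (receding horizon)."""
    a_m = math.exp(-dt / tau_model)
    b_m = K_model * (1.0 - a_m)
    a_p = math.exp(-dt / tau_plant)
    b_p = K_plant * (1.0 - a_p)
    y = 0.0
    ys = [y]
    for _ in range(steps):
        u = mpc_move(y, sp, a_m, b_m, horizon, 0.0, 100.0)
        y = a_p * y + b_p * u           # the plant responds, not the model
        ys.append(y)
    return ys

if __name__ == "__main__":
    good = run_closed_loop(2.0, 30.0, 2.0, 30.0, sp=50.0, steps=200)
    bad = run_closed_loop(1.0, 30.0, 2.0, 30.0, sp=50.0, steps=200)
    print(f"accurate model settles at   {good[-1]:.2f}")
    print(f"inaccurate model settles at {bad[-1]:.2f}")
```

With a model that matches the plant, the output settles at the setpoint. When the model gain is wrong, this simplified controller settles away from the setpoint, which is a small-scale version of the distorted view ahead in the driving analogy.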

Figure 4: While MPC can perform better than PID, an inaccurate model distorts the view ahead and may lead to worse performance than with feedback control only.

Greg: I have seen where experimental models (e.g., partial least squares and MPC models) have improved first principle models and vice versa. How is data science or machine learning enhanced by physics-based models used in process control?

John: Increased computational resources, data availability and machine learning methods have triggered a new era that is transforming industries. Self-driving cars, drone delivery, automated inspections and drilling automation are just a few of the areas that have benefited from the intersection of data science and process control. Digitalization efforts in data aggregation and cleansing are a foundational requirement for machine learning, and companies such as Seeq, Element Analytics, OSIsoft and others provide platforms for this work. Other companies such as Imubit offer AI process optimization solutions for oil refineries and chemical plants.

Most of the major service providers are also leveraging data science and machine learning in new product offerings. Some potential customers are disillusioned with machine learning because it has been oversold or marketed with few technical details on the underlying engine. This problem can be alleviated with more in-depth comparisons using open-source tools (Scikit-learn, PyTorch, TensorFlow, Keras) and case studies from sites such as Kaggle. There are good online resources to get started with machine learning or learn about current progress.

One significant limitation is the limited ability to use physics-based or other a priori knowledge in machine-learning algorithms. Combining physics-based elements with large training data sets expands potential applications and improves extrapolation potential. The hybrid approach has the potential to unlock new fundamental knowledge by enabling predictions built on physical principles that are informed by large data sets. We are working to add new ways to seamlessly combine machine learning and physics-based models through the Gekko Optimization Suite.

Greg: How is optimization used in automation solutions?

John: Optimization is the selection of the best solution among all possible feasible solutions. It is used in many parts of automation development including the initial design, model identification, and in some control algorithms. It is also used in supervisory applications to provide targets for underlying controllers and maximize production throughput. Reliable solvers are needed for online applications to ensure that a solution is obtained within the required cycle time. One way to improve the efficiency of the optimizer is to solve the equations and constraints simultaneously with the objective function instead of iteratively with a sequential approach. This requires gradient-based optimizers that can solve large-scale problems by exploiting equation sparsity, automatic differentiation to obtain derivatives, and an object-oriented modeling language framework.
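The difference between the sequential and simultaneous approaches can be written compactly. The notation here is an assumption chosen for illustration and does not come from the column: $u$ are the decision variables, $x$ are the model states, $J$ is the objective, $f$ are the model equations, and $g$ are the inequality constraints.

```latex
% Sequential: the optimizer adjusts only u, and the model equations
% f(x, u) = 0 are solved for x(u) inside every optimizer iteration.
\min_{u} \; J\bigl(x(u),\, u\bigr)
\quad \text{s.t.} \quad g\bigl(x(u),\, u\bigr) \le 0,
\qquad \text{where } x(u) \text{ solves } f(x, u) = 0

% Simultaneous: x and u are both decision variables, and the model
% equations are equality constraints that need to hold only at convergence.
\min_{x,\, u} \; J(x, u)
\quad \text{s.t.} \quad f(x, u) = 0, \quad g(x, u) \le 0
```

The simultaneous form has many more variables, but its constraint Jacobian is typically sparse, which is why sparsity-exploiting solvers and exact derivatives matter for this approach.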

Greg: What can be done to improve user and operator understanding and maintainability of MPC and optimization?

John: Operators need solutions that are intuitive and robust. A few years ago, I prepared an automation solution that was exactly what I would have wanted in an operator interface. The human-machine interface had many configuration options and details about how the program was operating. I was surprised when I presented it to the operator and he shook his head in disapproval. He said something to me that had a big influence on how I now deliver solutions: “You gave me a Cadillac and all I wanted was a Chevy pickup with a gas pedal, brake, and steering wheel.” I replaced the interface with a Go and Stop button and put more work into automatic selection of default options. I also created an engineering interface for drill-down explanations during troubleshooting. Most operators will use an automation solution if it makes their job easier and they can focus on higher-level objectives such as process improvements, handling edge cases or selecting the right donut.

10. I will convince y’all to add the application requirements for resolution, deadband, and 86% response time to valve and VFD articles and specifications

9. I will convince y’all that minimizing valve pressure drop and VFD system pressure drop can cause a quick-opening installed flow characteristic and severe loss of rangeability

8. I will convince y’all that too much reset action is much more of a problem than too much gain action

7. I will convince y’all that rate action is needed in temperature loops

6. I will convince y’all that thermowell design and sensor fit, not sensor type, is the biggest source of lag

5. I will convince y’all that RTDs can have an order of magnitude better accuracy and less drift than TCs

4. I will convince y’all that the Coriolis meter is the only true mass flow meter not affected by composition and that the order of magnitude greater accuracy and rangeability is often worth the investment

3. I will convince y’all that a slow process is good, offering tight control, if the slowness is due to a large process time constant

2. I will convince y’all that digital values on HMI with a resolution greater than the measurement resolution are a distraction at best

1. I will convince y’all that 3 electrodes and middle signal selection should be used on all pH loops