By now, many process control practitioners are familiar with virtual machines and their advantages when it comes to the deployment and management of process automation applications. In essence, a virtual machine uses a piece of software called a hypervisor to abstract the application and its operating system from the details of the underlying computer hardware. Multiple application images, or guests, together with their requisite operating systems—even legacy ones no longer supported by their creator—can run on a single host server or PC, often substantially reducing hardware footprint while simultaneously extending an application’s useful life.

But it turns out that virtual machines (VMs) were just another step in the continued convergence of operational technologies with those of the rapidly advancing IT world. Containerization and orchestration, next-generation virtualization concepts created to streamline the management of applications in cloud datacenters, are now inspiring new automation architectures that promise to ultimately liberate end-users from the painful and disruptive wholesale digital control system migrations that have plagued the process industries for the past 30 years.

Containers aren’t VMs

Containerization in many ways represents a more granular, lower-overhead approach to virtualization than the virtual machine. Multiple lightweight containers, each of which packages application code with all of its dependencies (runtime, system tools, system libraries and settings), plug into a container engine that sits atop the operating system infrastructure (Figure 1). In the Linux-based, open-source community, Docker is the most widely known container approach, although a version of Docker for Microsoft Windows has also been developed.

Figure 1. Containers represent a more granular, lower overhead approach to virtualization than traditional virtual machines.

“Everything that an application needs to execute is wrapped up in its container,” explains Andrew Kling, senior director, system architecture and cybersecurity, Schneider Electric. “This creates a single, contained environment that's different than the container next to it.”

From a systems perspective, each of these “machines” functions individually, and can be connected through a network. “Containers are independent and, like standalone machines, can be selected and deployed as needed to a particular platform, giving you a great deal of flexibility,” Kling says. “And, as individual, closed containers, they also give you great security.”

Ralf Jeske, ABB product manager for control systems and field device engineering, likens software containers to the familiar intermodal shipping containers that are the backbone of global commerce. “These containers are characterized by standardized dimensions and hooks,” he explains. “They can contain anything and be transported and moved with compatible equipment, and there's a unique ID and an inventory list describing the content of the container.”

Airlines, too, have developed specialized containers and unit load devices for fast loading and unloading of airplane cargo. The container details may vary by airplane model (target operating system), but like software containers, they peacefully coexist and keep to themselves—regardless of their respective contents.

Similarly, the process automation industry has its own special needs when it comes to software containers, Jeske says. “They need to be able to contain a wide range of applications—such as for advanced process control, process optimization and asset management—and to exchange data via a standard interface such as OPC UA.”

Further, process automation containers need to be movable among locations and hosting computer hardware, Jeske says. And for larger operations, an orchestration tool such as Kubernetes (created by Google then released to the open source community) can be used to maintain, organize and manage one’s inventory of containers, automatically rebalancing computing loads according to resource availability.

Orchestration and partitioning

Orchestration tools such as Kubernetes work continuously to restore a declared configuration of the distributed system whenever the running containers drift away from it. This “declarative” approach improves system resilience, according to Harry Forbes, research director with the ARC Advisory Group. “Another important advantage of orchestration tools is the ability to readily roll back changes,” Forbes says. “If you make a mistake, reverting to an earlier version of the container is fast and straightforward.”
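The idea of restoring a declared configuration, and of rolling back a change, can be illustrated with a toy model. The sketch below is not Kubernetes code and uses no Kubernetes API; the `Cluster` class, its fields and the application names are hypothetical, chosen only to show the pattern of comparing desired state with running state and correcting the difference.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for an orchestrator's view of a system (hypothetical)."""
    desired: dict                                  # app name -> declared replica count
    running: dict = field(default_factory=dict)    # app name -> replicas actually running
    history: list = field(default_factory=list)    # previously declared states

    def apply(self, new_desired):
        """Declare a new desired state, remembering the previous one."""
        self.history.append(dict(self.desired))
        self.desired = dict(new_desired)

    def rollback(self):
        """Revert to the most recently declared previous state, if any."""
        if self.history:
            self.desired = self.history.pop()

    def reconcile(self):
        """Compare running state with desired state and correct the difference."""
        actions = []
        for app, want in self.desired.items():
            have = self.running.get(app, 0)
            if have < want:
                actions.append(f"start {want - have} x {app}")
            elif have > want:
                actions.append(f"stop {have - want} x {app}")
            self.running[app] = want
        # Stop anything that is running but no longer declared.
        for app in list(self.running):
            if app not in self.desired:
                actions.append(f"stop {self.running[app]} x {app}")
                del self.running[app]
        return actions


if __name__ == "__main__":
    cluster = Cluster(desired={"historian": 2, "apc": 1})
    print(cluster.reconcile())           # initial start-up of all declared containers
    cluster.running["historian"] = 1     # simulate one historian container failing
    print(cluster.reconcile())           # the missing replica is restarted
    cluster.apply({"historian": 2, "apc": 2})
    print(cluster.reconcile())
    cluster.rollback()                   # the change was a mistake; revert it
    print(cluster.reconcile())
```

In this sketch the operator never issues "start" or "stop" commands directly. Only the declared state changes, and the reconcile step works out what has to happen, which is why a rollback amounts to re-declaring the earlier state.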

Strong partitioning among containers is another important differentiator relative to virtual machines, and in fact inspired the development and naming of containers.

“Most system administrators or UNIX application developers are familiar with the concept of 'dependency hell'—making available all of the system resources in order for an application to run, and then coordinating the same as different applications are updated on a server machine,” says Tim Winter, chief technology officer for Machfu, a developer of Industrial IoT connectivity solutions.

“It can often be a tricky and tedious exercise to maintain multiple application dependencies across all applications that are provisioned to run on the same server,” Winter explains. “Containers allow each application to bundle a controlled set of dependencies with the application, so that these applications can independently have stable execution environments, partitioned and isolated from other containerized applications on the same server. Even application updates are often packaged and deployed as container updates for convenience. Thus, containers provide strong partitioning between application components on a target machine.”
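Winter's point about bundled dependencies can be made concrete with a small sketch. Everything here is hypothetical (the `App` class, the application names and the library names and versions); it only contrasts a shared install, where two applications can demand different versions of the same library, with per-container dependency sets, where each application keeps its own.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    requires: dict  # library name -> exact version the app was built against


def shared_server_conflicts(apps):
    """Return libraries that apps sharing one install need at different versions."""
    wanted = {}
    for app in apps:
        for lib, version in app.requires.items():
            wanted.setdefault(lib, set()).add(version)
    return {lib: sorted(versions) for lib, versions in wanted.items() if len(versions) > 1}


def containerize(apps):
    """Give each app a private copy of its dependency set, so nothing is shared."""
    return {app.name: dict(app.requires) for app in apps}


if __name__ == "__main__":
    apps = [
        App("historian", {"tls-lib": "1.1", "math-lib": "2.0"}),
        App("optimizer", {"tls-lib": "3.0", "math-lib": "2.0"}),
    ]
    print(shared_server_conflicts(apps))  # the version clash on a shared server
    print(containerize(apps))             # each container carries its own versions
```

The cost of this isolation is duplication: the library that both applications agree on is carried twice, once in each container.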

Dennis Brandl, chief consultant with BR&L Consulting, adds that, “Many companies found that the cost and effort involved with implementing a physical machine was much higher than with a virtual one.” However, traditional VMs carried with them their own costs. “Sure, you may have one server running multiple applications, but you’re also running multiple virtualized operating systems as well as a hypervisor, which is itself a miniaturized operating system,” adds Brandl. “Bloat is a big issue, plus all of that software still has to be updated and managed.”

Hardware and software decoupled

Even as VMs made their namesake splash at the HMI and application server level, virtualization was starting to creep into other areas of process automation as well. The move to software configurable I/O, for example, allowed Honeywell Process Solutions to begin considering the broader possibilities afforded when software and hardware development are decoupled—not just at the server level, but for controllers and I/O as well, according to Jason Urso, vice president and chief technology officer.

“As we continue to deploy these newer technologies, the better we understand their power and capabilities—and it leads to other new ideas,” Urso says. One such evolution in Honeywell’s offering is the Experion LCN (ELCN), which effectively emulates the company’s aging TDC 3000 system as software and promises “infinite longevity” for its customers’ intellectual property investments.

“It’s 100% binary compatible and interoperable with the old system,” said David Patin, distinguished engineering associate – control systems, ExxonMobil Research & Engineering, at the Honeywell Users Group Symposium in June 2018, when the ELCN was unveiled to the public. “Current TDC code runs unmodified in this virtual environment, greatly reducing the technical risks. Intellectual property such as application code, databases and displays are preserved.”

Virtualization of the TDC environment has come with some added benefits, including the ability to use Honeywell’s cloud-based Open Virtual Engineering Platform to engineer TDC solutions; lower cost, smaller footprint training simulators; peer-to-peer integration of virtualized HPM controller nodes with current-generation C300/ACE nodes; and integration with ControlEdge and Unit Operations Controllers.

For its part, Honeywell has aggressively pursued its virtualization vision since then, launching at the 2019 user group meeting its Experion PKS HIVE, for Highly Integrated Virtual Environment. In short, the solution features virtualization and the decoupling of hardware/software dependencies at the application, controller and I/O levels.

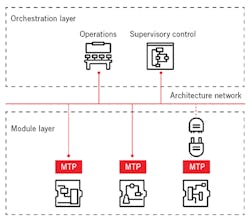

Another container instantiation making inroads in the process industry is the Module Type Package (MTP). MTPs are in essence containers created to ease the integration and automation of modular process plants via pre-automated modular units that can easily be added, arranged and adjusted according to production needs.

Consistent with a standardized methodology and framework, each MTP includes all necessary information to integrate itself into a modular plant, such as the communication services, a human machine interface (HMI) description and maintenance information.

In ABB’s MTP offering, for example, the company’s ABB Ability System 800xA operates the process and orchestrates the intelligent modules. An open-architecture backbone links the orchestration layer to the module layer with communication via OPC UA.

Modular-enabled automation reduces cost, risk and schedule by eliminating non-standard interfaces. Integrating an intelligent module into the orchestration system takes hours, compared with days for traditional package unit and skid integration.

“All types of projects today, from small to large scale, use more and more prefabricated skids and modules,” says ABB’s Jeske. “The ability to maintain a process automation container allows for more reuse as well as cheaper and faster plant build-up. Over time, packages, including some highly sophisticated packages and modules, may be leased or paid for by use.

“After a pilot phase, businesses can now start applying MTP in plants for expansions,” Jeske says. “And plans for new, ‘purely modular’ production lines are being made as we speak.”

Win-win for developers, users

At an industry level, process automation practice is moving along a path already traversed in adjacent industries, such as finance, telecommunications and healthcare, notes Schneider Electric’s Kling. “In an industrial control system within the process automation space, we're now picking up on that, taking their successes and applying them to our industry.”

“Today, we don’t always realize when we're using containers, like when we're interacting with cloud-based services,” Kling adds. “And applications that rely on cloud services may already be taking advantage of containers. Where we are going, those containers will move closer and closer to the edge, on-premise or to the cloud, or to embedded devices that live on the asset as well.

“We have seen these containers used in product-registration platforms and in historian-, engineering- or analysis-in-the-cloud applications. These systems have long been supported by companies like Microsoft, Red Hat and others because they're native to their cloud-based platforms.”

Project execution, in particular, has benefitted tremendously from container technology: automation contractors can now build out entire virtualized control systems, test and verify them against virtualized models of the process, and only then download them to local hardware.

“That's compelling for us because in a big project doing this in the traditional way could take 18 months,” says Honeywell’s Urso. “We used to have armies of people working on physical boxes at some remote place. Now, we can have our people working on the project that's hosted in a cloud datacenter, in a set of virtual machines. And when it's complete, we copy the virtual machine to the physical equipment.”

“You'll never have to hit Setup.exe again!” exclaims Kling, who clearly has spent some time lugging DVDs (perhaps CDs and maybe floppies, too?) from operator station to operator station. “You're delivering a container with the application set up already, so that entire concept will disappear. Containerized software will also offer new levels of flexibility because you can build a repository of these templated, ‘ready to go’ applications and deploy them as needed.”

And because the container is outside the actual operating system, the OS can be upgraded without having a significant effect on the applications. “We're starting to see the different layers being separated,” says Kling. “The software and hardware that were built specifically to work together are becoming more independent, ultimately allowing the hardware, operating system and applications to all evolve independently.”

Upgrades and replacements will also be much less painful, notes BR&L's Brandl. “We may not be talking about a ‘no shutdown’ replacement. However, we are talking about a significantly faster changeover, and that’s a good thing from an end-user perspective.”

ARC's Forbes adds: “Software containers provide two major values to software developers and end users. First, an automated means to deploy and manage multiple distributed applications across any number of machines, physical or virtual. Second, a container software development process that creates a repository of ‘container images’—software deliverables that can be created collaboratively, and include the artifacts required for running an application within a specific machine environment.”

Container development, deployment and orchestration software tools have matured phenomenally during the last five to 10 years, Forbes continues. They now far surpass traditional embedded system software technology in their capability to deliver and manage distributed and high-availability applications, such as the automation applications of tomorrow’s distributed control systems. “This is why the effective use of container deployment and orchestration software is likely to be a critical success factor for future process automation systems,” Forbes says.

Do containers = open?

Figure 2. Among the first process automation-specific containers, MTPs are intended to ease the integration of modular process plants.

With the notable emergence of leading open-source, Linux-based container and orchestration options, it’s fair to ask whether such technologies will advance the cause of the Open Process Automation Forum (OPAF) to identify a path toward interoperable, plug-and-play process control.

The short answer? It depends.

“The use of containers allows the abstraction of applications from the hardware, or the execution of the same application on different hardware depending on the particulars of the installation,” notes Luis Duran, ABB global product line manager, safety. “If the interfaces are properly defined, containerization could enable the portability of applications or interoperability of different technologies, potentially even technologies from different suppliers.

“Containerization also allows a manufacturer to protect intellectual property and domain knowledge as well as maintain productivity even during technology upgrades,” Duran continues. “And since hardware is abstracted from application, one can envision scenarios in which the application is transferred to a more robust platform with minimum downtime.”

Brandl notes that while there are real-time versions of Linux available, there currently are no “real-time,” open-source containerization/orchestration schemes suitable for deterministic control tasks. “It probably won’t be led by one of the majors, but it would be great if some of the second- and third-tier automation companies came together to create a real-time Docker and Kubernetes implementation.”

Schneider's Kling predicts that the move to a container-based realization of the OPA vision will begin with standards such as IEC 61499, which supports application-centric automation design independent of the underlying hardware devices.

“IEC 61499 features an event-driven model built around function blocks, which solves the problem of ensuring portability, configurability and interoperability across vendors and, at the same time, software and hardware independence,” explains Kling. “This standard allows us to develop containers independently that will function across platforms. Companies will continue to build packages that best suit their offers, but the software will become increasingly interoperable because of standards like IEC 61499.”
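The event-driven function block model Kling describes can be sketched in a few lines. This is a loose illustration of the idea only, not a conforming IEC 61499 runtime: it leaves out most of the standard's model (for example, execution control and the mapping of blocks to devices), and the `FunctionBlock` class, its methods and the two example blocks are hypothetical names invented for this sketch.

```python
class FunctionBlock:
    """Minimal event-driven block: an input event runs an algorithm, then an output event fires."""

    def __init__(self, name, algorithm):
        self.name = name
        self.algorithm = algorithm   # maps a dict of input data to a dict of output data
        self.inputs = {}
        self.outputs = {}
        self._connections = []       # (downstream block, {output name: input name})

    def connect(self, downstream, mapping):
        """Wire this block's output event and data to a downstream block."""
        self._connections.append((downstream, mapping))

    def fire(self, **data):
        """Handle an input event: latch data, execute, then notify downstream blocks."""
        self.inputs.update(data)
        self.outputs = self.algorithm(self.inputs)
        for downstream, mapping in self._connections:
            downstream.fire(**{dst: self.outputs[src] for src, dst in mapping.items()})


# Two hardware-independent blocks: scale a 4-20 mA signal to percent, then check a limit.
scale = FunctionBlock("scale", lambda d: {"pct": (d["ma"] - 4.0) / 16.0 * 100.0})
alarm = FunctionBlock("alarm", lambda d: {"high": d["pct"] > d.get("limit", 90.0)})
scale.connect(alarm, {"pct": "pct"})

if __name__ == "__main__":
    scale.fire(ma=12.0)
    print(scale.outputs, alarm.outputs)
    scale.fire(ma=20.0)
    print(scale.outputs, alarm.outputs)
```

Nothing in either block refers to the device it runs on. The blocks know only their events and data, which is the property that lets the same application be deployed to different hardware.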

So, while containerization and orchestration technologies can advance interoperability and openness, it’s also clear that virtualization strategies can be leveraged and advanced in a proprietary fashion, too. ARC’s Forbes recognizes this orthogonality, but believes that containerization and orchestration need to be part of industry’s approach if it is to liberate its intellectual property from “control languages and idioms that often are not machine readable and are always highly proprietary.

“Standardized container and orchestration tools offer an exit from this dead end.”

About the Author

Keith Larson

Group Publisher

Keith Larson is group publisher responsible for Endeavor Business Media's Industrial Processing group, including Automation World, Chemical Processing, Control, Control Design, Food Processing, Pharma Manufacturing, Plastics Machinery & Manufacturing, Processing and The Journal.
