Why do we make operational mistakes that can lead to accidents? Certainly, operators sometimes make simple errors, such as opening or closing the wrong valve. In many cases, however, operators make poor decisions based on the information available to them at the time. A common refrain is, “It made sense at the time.”

In other words, operators’ decisions and actions are largely based on their situational awareness of what's going on in the process at the time, what immediately preceded it, and what they expect will happen in the near future. Supervisors and managers, too, are not immune to mental errors that can lead to faulty situational awareness, resulting in poor decisions and actions.

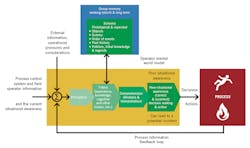

Operational situational awareness is illustrated in Figure 1. The operator must take information from various sources (process control systems, field operators, supervisors, etc.), then organize, analyze and generally make sense of it to determine what's happening in the process [1]. Note that poor situational awareness leading to poor decision-making can apply across the board to individuals, groups and organizations, and can affect activities and practices in process safety, engineering and maintenance.

Figure 1: Accurate situational awareness relies on the operator being able to organize and analyze data from various information sources to understand what is happening in the process.

Without correct situational awareness, we can make bad decisions, potentially leading to an operational upset, poor product quality or, in the worst cases, a hazardous incident or accident. Operators must interpret what the process is doing, and why, by evaluating and analyzing the information presented to them by the control system, field operators and other sources.

Situational awareness can be considered the sum of an operator’s perception and comprehension of the process information being provided. This allows the operator to update his or her process worldview, assess the system’s current state, project its near-future states and, on this basis, make decisions or take actions [1].

But a funny thing happens on the way to the worldview. The information is filtered by experience, training and cognitive biases (preconceptions, expectations and interpretations), which lead in the best case to critical and valid analysis, and in the worst case to distorted situational awareness. Even with the best of information, this filtering can sometimes produce a distorted or incorrect worldview and, from there, an accident. A situational awareness model is illustrated in Figure 2.

Fortunately, most of the time, the operator’s situational awareness is correct or accurate enough to avoid poor decision-making. If not, we'd see a lot more incidents and accidents. Still, operators don't always get it right for various reasons, including cognitive bias.

A cognitive bias can be defined as a way of understanding events, facts and other people that is based on one's own set of beliefs and experiences. It may even appear rational, but it can get in the way of logical thinking and analysis, so the end result is neither reasonable nor accurate. Here’s another definition that the author is partial to: “a systematic error in thinking that impacts one's choices and judgments” [3]. Cognitive biases can produce incorrect situational awareness that distorts our thinking and leads us to wrong conclusions, decisions and actions based on the information provided to us. When applied to the information supplied to the operator, cognitive bias can affect plant operation negatively. In some cases, however, the effect can be positive, such as when the resulting actions lead to a safer state (e.g., more conservative operational actions).

Figure 2: Cognitive biases are among the filters that can distort an operator's situational awareness and lead to poor decision-making.

Sources of bias

Illogical thinking. Even the best of us can sometimes be subject to illogical thinking. This can be caused by a mental slip or mistake, or by external problems. The key is to come to work with our game faces on, realizing the importance of our work and the consequences of doing a poor job. Hazardous processes are seldom forgiving.

Confirmation bias. Probably one of the most common cognitive biases, confirmation bias is essentially that one tends to see what one wants or expects to see. Confirmation bias is the tendency to search for, interpret or recall information in a way that confirms one's beliefs or hypotheses. With confirmation bias, people tend to unconsciously select information that supports their views and ignore non-supportive information. People also tend to interpret ambiguous evidence as supporting their existing position. This can lead operators to ignore important process information that doesn't support their current process worldview.

Framing effect. The framing effect is a facilitated cognitive bias that occurs when one’s decisions are swayed by how information is presented: data quantity and presentation (emphasis, style, colors, fonts, size, etc.), hierarchical order, lack of appropriate information when needed, and so on. This can occur when the control system presents information in a way that distorts the operator's perspective of what's going on or limits their knowledge. This is typically a system design error. Common examples are when operators are overloaded during an alarm flood or when needed information is difficult to access.
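To make alarm-flood overload a little more concrete, the short Python sketch below flags the moments when an operator's alarm rate crosses a flood threshold. It assumes the threshold often quoted from ISA-18.2 and EEMUA 191 guidance (more than 10 alarms in 10 minutes for one operator); treat that number as an assumption to check against your own alarm philosophy. The function name, defaults and alarm data are hypothetical and for illustration only.

```python
from collections import deque
from typing import Deque, Iterable, List

# Assumed flood definition (often quoted from ISA-18.2 / EEMUA 191 guidance);
# check these values against your own site's alarm philosophy.
FLOOD_WINDOW_S = 600.0  # trailing window length, seconds (10 minutes)
FLOOD_THRESHOLD = 10    # more alarms than this inside the window = flood


def flood_onsets(alarm_times_s: Iterable[float],
                 window_s: float = FLOOD_WINDOW_S,
                 threshold: int = FLOOD_THRESHOLD) -> List[float]:
    """Return the times at which alarm floods begin (hypothetical helper).

    alarm_times_s must be sorted in ascending order. The count is
    re-evaluated at each new alarm: a flood starts when the number of
    alarms in the trailing window first exceeds the threshold, and is
    considered over once a later alarm finds the count back at or below it.
    """
    window: Deque[float] = deque()
    onsets: List[float] = []
    in_flood = False
    for t in alarm_times_s:
        window.append(t)
        # Discard alarms that have aged out of the trailing window.
        while window[0] <= t - window_s:
            window.popleft()
        if len(window) > threshold:
            if not in_flood:
                onsets.append(t)
                in_flood = True
        else:
            in_flood = False
    return onsets


# Hypothetical alarm log: one alarm every 5 minutes for about an hour,
# then a burst of 15 alarms 10 seconds apart starting at t = 3600 s.
steady = [300.0 * i for i in range(12)]         # 0 ... 3300 s
burst = [3600.0 + 10.0 * i for i in range(15)]  # 3600 ... 3740 s
print(flood_onsets(steady + burst))  # [3690.0]
```

The steady background of one alarm every five minutes never trips the check; the flood is flagged at the tenth alarm of the burst, when 11 alarms fall inside the trailing 10-minute window. Tracking when and how often this happens is one way to see whether the control system, rather than the operator, is the weak link.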

Cum hoc ergo propter hoc. Latin for “with this, therefore because of this,” the correlation/causation cognitive bias arises when two or more events appear to be correlated, such as process variables that track each other. The operator may assume that one event causes the other when in reality something else is causing the correlation, or the assumed cause and effect are reversed.

This can occur during an event, but it more commonly occurs when operators make assumptions, based on their operational experience, about how the process works. Today’s new operators tend to have more education in chemistry and process unit operations, but this education is typically incomplete, leaving them to fill in the blanks with hands-on experience, training and what they learn from other operators. This can produce a mental worldview with incorrect beliefs about what causes what in the process.

Another version of this cognitive bias is called post hoc ergo propter hoc, which is Latin for “after this, therefore because of this.” In other words, "since event Y followed event X, then event Y must have been caused by event X."
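The correlation/causation trap can be illustrated with a few lines of Python using made-up numbers. In this hypothetical sketch, a daily ambient-temperature swing drives two process variables (labeled here as a cooling water temperature and a tank level reading) that have no causal link to each other. They still track each other closely, because both respond to the same hidden common cause. The variable names, gains and offsets are invented for illustration.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


hours = list(range(48))

# Hidden common cause: a daily ambient-temperature swing, degC (invented).
ambient: List[float] = [25.0 + 8.0 * math.sin(2 * math.pi * h / 24) for h in hours]

# Two process variables that each respond to ambient temperature but have
# no causal link to each other. Gains, offsets and the small deterministic
# "noise" terms are made up for illustration.
cooling_water_temp = [0.6 * a + 10.0 + 0.05 * ((h % 5) - 2) for h, a in zip(hours, ambient)]
tank_level_reading = [0.02 * a + 1.5 + 0.01 * ((h % 3) - 1) for h, a in zip(hours, ambient)]

print(f"PV vs PV:      r = {pearson(cooling_water_temp, tank_level_reading):.3f}")
print(f"ambient vs PV: r = {pearson(ambient, cooling_water_temp):.3f}, "
      f"{pearson(ambient, tank_level_reading):.3f}")
```

All three coefficients come out close to 1. The high correlation between the two process variables says nothing about which, if either, causes the other; only an understanding of the process can establish that.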

Selective attention bias. Also called the “illusion of attention,” this is when a person strongly concentrates on one thing to the exclusion of other things they should have caught. This bias can even affect subject matter experts. Examples have been reported in the medical field, involving experienced radiologists who, when looking for a particular diagnosis on X-rays, missed obvious, serious problems not related to the looked-for diagnosis [4].

Strong concentration during a developing event is normal, but it can sometimes exclude important and relevant information outside the area of focus. Under stress, tension and pressure, all of which can occur during a developing hazardous event, focus can narrow, bringing selective attention bias into play. The ability to step back and look at the bigger picture, or to consider alternatives, can help counteract this effect. A well-known example illustrating this bias is discussed in the book The invisible gorilla and other ways our intuitions deceive us [4]. A video demonstrating this bias can be found at https://youtu.be/vJG698U2Mvo. The opposite of selective attention bias is lack of attention, which can be facilitated by work overload or operational distractions.

Anchoring bias. Anchoring bias describes a tendency to rely too heavily on the first piece of information offered to us (the “anchor”) when making decisions. The operator can sometimes be sent down a rabbit hole or shoot from the hip, rather than taking some time to analyze the situation. Fast reactions are sometimes needed in emergency conditions and should be based on trained responses, but most of the time, taking a little time to think about the situation can lead to better decisions and actions.

The Dunning-Kruger effect. This cognitive bias describes situations in which people overestimate their ability to do something or their knowledge about a certain subject. Good operators are typically confident, but they know their limits. However, there are always people who overestimate their knowledge or abilities. This can lead to operators not asking for help when they need it, or taking unnecessary risks.

Group think. This describes the situation in which people in a group come to the wrong conclusion because they think all the other people believe or want to do something when none of them actually do. This can be a problem when a group of operations personnel draws conclusions about why something is happening or, more commonly, what to do about it. They reach a bad decision because each thinks the others believe something they really don't. The sum of the parts simply doesn't always add up to the whole.

An example of group think is provided in a story called “the trip to Abilene” (https://psychologenie.com/explanation-of-abilene-paradox-with-examples).

Bandwagon effect. The bandwagon effect is a cognitive bias that occurs when people place greater value on conformity than on expressing (or having) their own opinions, which can distort the situational awareness formed by a group. This typically occurs when dominant personalities, politics or dysfunctional group dynamics are in play.

Argumentum ad verecundiam. Latin for “appeal to authority,” this is a cognitive bias that deems an assertion true because of the position or authority of the person asserting it. This commonly occurs when a dominant person, such as a supervisor or manager, drives an apparent but potentially incorrect situational awareness without considering alternatives.

A side effect of this can be a facilitated cognitive bias when upper-level managers are present during an event, or when they create a punitive operational environment. This can increase the pressure on operators to do the “right” thing, leading them to take actions they think management will find agreeable, or to fail to bring the process to a safe state because of pressure not to do so.

Experience and training. Experience and training are typically good filters for reaching correct situational awareness. In some cases, however, experience can lead us to an incorrect conclusion that doesn't match the situation. For example, an operator may call up from memory a previous case or schema that appears to fit the current situation—but doesn’t. The recalled case may be folkloric (an errant story from another operator) or legendary (based on historical information, but suffering distortion due to mistranslation or the passage of time).

In other situations, the case connection may appear to be correct but is actually mismatched [1]. Examples include reasoning by analogy when the analogy doesn't fully apply, or drawing an incorrect cause-and-effect conclusion from previous experience.

Poor training can also lead to incorrect reasoning if folklore, legends or incorrect cause-and-effect connections are passed on by a trainer speaking from experience rather than from scientific or engineering facts. This typically occurs during on-the-job training.

Favorite source of information. This bias can occur when an operator favors a group of instruments over others, and sometimes ignores the others’ data. This is a matter of trust, and can occur when an instrument has caused problems in the past.

The author experienced a real-life example of this when a plant got a new-technology total carbon analyzer. It had teething problems for about three months, but was eventually fixed. Despite the fact that it was operating correctly and reliably, the operators wouldn't trust the instrument, and it was eventually taken out of service and replaced with a different technology.

Correcting for biases

The first defense against cognitive biases is to be aware that they exist and that our logic is not always flawless. Educating operators about cognitive bias is a first step: they should learn what situational awareness is, what can affect it, how to verify it and how cognitive biases can distort it.

Situational awareness can be improved if the framing of process control system information is improved. Operators should review the design of operator displays, using simulations of normal operations, process upsets, emergency conditions and HAZOP/LOPA hazardous scenarios, to see whether the correct situational awareness can easily be obtained from the process control system displays. After incidents or negative events, even those with positive outcomes, operators should be interviewed to see how their situational awareness played out during the event. Negative and positive situational awareness results can then be quantified, treated as lessons learned, and used in future designs and training.

Reliable instrumentation can go a long way toward accurate situational awareness. Operators and maintenance technicians may have completely different views about the reliability and quality of a unit’s instruments. These differences should be resolved to help improve the operators' trust in the instruments. The old saying “trust but verify” also applies. The ability to verify questionable measurements is icing on the cake: it can reduce operators’ selective reliance on particular instruments to the exclusion of others, encourage good feedback about field instruments and help spot instrumentation problems.
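Where redundant measurements of the same variable exist, one simple way to put “trust but verify” into practice is to compare each reading against the median of its peers. The sketch below is hypothetical: the helper name, tag names, values and tolerance are invented, and a real system would use its own deviation alarm design. With three or more readings, a single bad instrument cannot drag the median outside the range of the good readings, so the odd one out stands out instead of being quietly trusted or ignored.

```python
from statistics import median
from typing import Dict, List


def deviating_instruments(readings: Dict[str, float], tolerance: float) -> List[str]:
    """Return the tags whose reading differs from the median of all
    readings by more than `tolerance` (same engineering units).

    Hypothetical helper: requires at least three readings so that one bad
    instrument cannot pull the median outside the range of the good ones.
    """
    if len(readings) < 3:
        raise ValueError("need at least three readings of the same variable")
    mid = median(readings.values())
    return sorted(tag for tag, value in readings.items() if abs(value - mid) > tolerance)


# Invented example: three level transmitters on one vessel, % of span.
readings = {"LT-101A": 62.1, "LT-101B": 61.8, "LT-101C": 48.0}
print(deviating_instruments(readings, tolerance=2.0))  # ['LT-101C']
```

If two transmitters agree and the third does not, that is a prompt to check the odd one out in the field, rather than a reason to trust whichever instrument the operator happens to favor.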

While some companies have reduced their operations staff to a single operator for a section of the plant, having other experienced operators, as well as an experienced shift foreman, work together as a team can help improve overall operational situational awareness. Likewise, while some companies treat the operations or process engineer role as a training position for supervision and management, having one on hand can be a big help.

Training and experience typically lead to operators with better situational awareness. Train operators to step back and look at the big picture and to work effectively under stress. Further, teach supervisors and managers not to make decisions that override experienced operators unless there are clear technical reasons to do so.

Strong operational discipline is also key to helping overcome cognitive biases. A structured approach to decision-making can minimize potential errors due to an incorrect situational awareness.

An ongoing process

Cognitive biases can have a negative effect on an operator’s situational awareness. To improve operations and make our plants safer, cognitive biases and other factors that adversely affect decision-making must be recognized and addressed. This process is ongoing, and it can't be started too soon.

All negative events, incidents, near misses and accidents should be followed by an evaluation of the operator’s situational awareness during the event—both good and bad aspects—so that lessons learned can be developed, and operational procedures and control system information presentation can be improved. When significant operator errors are discovered, even without a negative outcome, a root-cause analysis (not a witch hunt) should be done in the spirit of improvement.

References

1. William L. Mostia, Jr., P.E., “Why bad things happen to good people,” Mary Kay O'Connor Process Safety Center, 2009 International Safety Symposium; Journal of Loss Prevention in the Process Industries, Vol. 23, 2010, pp. 799-805.

2. David B. Kaber and Mica R. Endsley, “Team situational awareness for process control safety and performance,” Process Safety Progress, Vol. 17, No. 1, Spring 1998.

3. “What is cognitive bias? Definition and examples,” ThoughtCo, http://www.thoughtco.com/cognitive-bias-definition-examples-4177684

4. Christopher Chabris and Daniel Simons, The invisible gorilla and other ways our intuitions deceive us, Random House, 2010.

Frequent contributor William (Bill) Mostia, Jr., P.E., is principal of WLM Engineering.
