Safety and security: Two sides of the same coin

In many languages, there is only one word for safety and security. In German, for example, the word is 'Sicherheit,' in Spanish it is 'seguridad', in French it is 'sécurité' and in Italian it is 'sicurezza.'

According to Merriam-Webster, the primary definition of safety is "the condition of being free from harm or risk," which is essentially the same as the primary definition of security, which is "the quality or state of being free from danger." However, there is another definition for security; that is, "measures taken to guard against espionage or sabotage, crime, attack or escape," and this is generally the definition we are using when we refer to industrial security.

Using these definitions, we can better understand the relationship between safety and security. A weakness in security creates increased risk, which in turn creates a decrease in safety. So safety and security are directly proportional to each other, and both are inversely proportional to risk. While this may all seem elementary, understanding the relationship between safety and security is very important to understanding how to integrate the two. Those who own and operate industrial facilities, especially those that many governments have defined as critical infrastructure, certainly understand the meaning and importance of safety and security relative to their operations.

In the context of industrial automation and control systems, safety systems are special control systems whose function is to detect a hazardous condition and take action (typically shutting down the process) to prevent a hazard. They are typically one of many layers of defense in an overall protection scheme for the facility. Control system security, by contrast, refers to the capability of a control system to provide adequate confidence that unauthorized persons and systems can neither modify the software and its data nor gain access to the system functions, while ensuring that such access is not denied to authorized persons and systems.

Until recently, the engineering disciplines of safety system design and control system security were effectively on separate but parallel paths. Safety standards and the associated engineering work practices are mature and well established, based on decades of learning. Control system security, on the other hand, is a much newer field and has its roots in information system or IT security. Some say control system security is where safety system engineering was about 10 years ago.

So why is there sudden interest in integrating the safety system and control system security disciplines? One reason is that safety instrumented systems (SIS), once totally isolated, are increasingly becoming connected to or integrated with process control systems that connect to the outside world. This is significant because a security breach of an SIS could directly prevent the SIS from performing its intended protection function, which could lead directly to a catastrophic event. On the other hand, a security incident in a control system, while still having the potential to be very damaging, should be limited to causing a process shutdown because the SIS is there to prevent a dangerous situation, provided it was designed properly and was not also compromised. The integration of control and safety systems raises significant concerns about the possibility of a common security vulnerability affecting both systems.

Another reason for the sudden interest is a growing recognition of the many similarities between the safety and security life cycles, and that there are improvements and efficiencies to be gained by combining the two approaches. By addressing both safety and security fundamentally from the beginning, asset owners will be able to head off the need to perform a second costly process later to find and address security vulnerabilities.

This interaction between the safety of a critical system and security became painfully obvious to the owners of the Hatch Nuclear facility in March of 2008. According to data supplied by the Repository of Industrial Security Incidents (www.securityincidents.org), the Hatch Nuclear Power Plant near Baxley, Ga., was forced to shut down for 48 hours after a contractor updated software on a computer that was on the plant's business network. The computer was used to monitor chemical and diagnostic data from one of the facility's primary control systems. The software was designed to synchronize data on both systems. When the updated computer rebooted, it reset the data on the control system, causing the safety system to interpret the lack of data as a drop in the water reservoirs that cool the plant's radioactive fuel rods. The safety system behaved as designed and triggered a shutdown. The contractor was not aware that the control system would be synchronized as well or that a reboot would reset the control system.

The remainder of this article will present an approach to merge the front-end of the safety and security life cycles to demonstrate the possibility and the benefits of taking an integrated approach to safety and security, especially when designing a new or retrofitting an existing system. While the authors believe it is also possible to merge subsequent phases of the safety and security life cycles, it is beyond the scope of this article to cover the latter phases. Additionally, we feel the greatest similarities are in the front end of the processes, and integrating the processes up-front will provide the greatest benefit throughout the process.

Let's start by taking a look at the life-cycle models for safety system engineering and control system security. The safety life-cycle model from IEC 61511 (also ANSI/ISA S84) has three main phases: Analysis, Realization and Operation. The security level life-cycle model from ANSI/ISA S99.00.01-2007 also has three main phases: Assess, Develop and Implement, and Maintain.

The Safety Analysis and the Security Assess phases have the most similarity by far because, in both cases, the purpose of the phase is to determine the amount of risk present and decide if it is within tolerable limits for the facility. Determining the amount of risk involves identifying the consequences (what could happen and how bad would it be?) and the likelihood of occurrence (how could it happen and how likely is it to happen?).

A typical first step in this process is the hazard and operability analysis or HAZOP. A HAZOP, the most widely used method of hazard analysis in the process industries, is a methodology for identifying and dealing with potential problems in processes, particularly those which would create a hazardous situation or a severe impairment of the process. A HAZOP team, consisting of specialists in the design, operation and maintenance of the process, analyzes the process and determines possible deviations, feasible causes and likely consequences. It is important that the industrial automation and control system (IACS) be listed as a cause if failure of the IACS or unauthorized access could initiate a deviation.

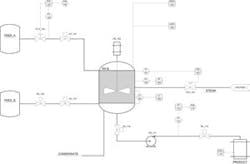

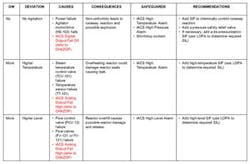

Figure 1 shows the piping and instrumentation diagram (P&ID) for a simple chemical reactor. A portion of an example HAZOP for the process is shown in Figure 2. The text in red highlights the IACS-related causes.

Unfortunately, other than identifying the IACS as a potential cause, a HAZOP doesn't study the details of IACS deviations, which is an important step, especially given the size and complexity of modern control systems. An increasingly popular solution to this problem is a special version of a HAZOP called a control hazards and operability analysis (CHAZOP), which takes the next step in understanding the details of IACS hazards. Another technique is a failure modes and effects analysis (FMEA). Both techniques identify causes and consequences of control system failures. The CHAZOP technique builds on the HAZOP concept of deviations and guidewords, extending the list of guidewords to cover IACS-specific types of deviations. The FMEA process takes a more hardware-centric approach by systematically studying the failure modes of each component and their effects on the system. Either technique is acceptable. However, regardless of the technique selected, it is important to include security deviations or failure modes in the analysis.

Figure 3 shows a portion of an example CHAZOP that looks deeper into possible causes of the IACS deviations. In this example, the red text highlights the security-related deviations.

Upon completion of the HAZOP and CHAZOP or FMEA, we should have identified all of the causes of IACS failure, including security failures, and the consequences of those failures. However, we still have not determined the likelihood of these events occurring, which is a necessary step in quantifying the risk, as risk is the product of likelihood and consequence.
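As a minimal sketch of that last point, the Python below treats risk as the product of likelihood and consequence and ranks a few scenarios by it. The scenario names, frequencies and cost figures are invented purely for illustration; they do not come from the example HAZOP or CHAZOP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    cause: str
    likelihood_per_year: float  # assumed events per year (illustrative)
    consequence_cost: float     # assumed loss per event, arbitrary currency units

    @property
    def risk(self) -> float:
        # Risk is the product of likelihood and consequence.
        return self.likelihood_per_year * self.consequence_cost


# Hypothetical scenarios; all numbers are placeholders.
scenarios = [
    Scenario("Level transmitter fails low", 0.1, 500_000),
    Scenario("Unauthorized setpoint change via IACS", 0.02, 5_000_000),
]

# Rank scenarios from highest to lowest risk.
ranked = sorted(scenarios, key=lambda s: s.risk, reverse=True)

for s in ranked:
    print(f"{s.cause}: {s.risk:,.0f} per year")
```

Note how a rare event with a severe consequence can outrank a more frequent but milder one, which is why both factors have to be estimated before scenarios are prioritized.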

Estimating likelihood, particularly for security, can be a difficult task because it can be very difficult to estimate the skill and determination of an attacker. We can simplify the task by filtering the list of scenarios to include only those with intolerable consequences (e.g., those with the potential to cause injury, death, significant downtime, or environmental or major equipment damage). There are a variety of techniques available, including risk matrices and risk graphs. However, in safety, one of the most popular techniques for estimating likelihood is layer-of-protection analysis (LOPA). Part of the reason for its popularity is that it gives the user credit for employing a layer-of-protection strategy to mitigate risk. The security field uses the term defense-in-depth to describe a very similar concept. Even though the terminology is different, the LOPA technique can definitely be applied to security threats.
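The arithmetic behind LOPA can be sketched in a few lines of Python: the mitigated event frequency is the initiating event frequency multiplied by the probability of failure on demand (PFD) of each independent protection layer, and the result is compared with a tolerable frequency. The layer names, PFD values and tolerable frequency below are assumptions chosen only to make the example concrete. The second case shows what happens if the SIS cannot be credited as independent, for instance because it shares a security vulnerability with the control system.

```python
from math import prod


def mitigated_frequency(initiating_frequency, layer_pfds):
    """Initiating event frequency (per year) times the PFD of each credited layer."""
    return initiating_frequency * prod(layer_pfds)


# All numbers below are illustrative assumptions, not recommended values.
INITIATING_FREQUENCY = 0.1   # initiating events per year
TOLERABLE_FREQUENCY = 1e-5   # tolerable events per year for this consequence

layers = {
    "operator response to alarm": 0.1,
    "safety instrumented system": 0.01,
    "relief valve": 0.01,
}

# Case 1: every layer is independent and receives full credit.
all_credited = mitigated_frequency(INITIATING_FREQUENCY, layers.values())

# Case 2: the SIS is not independent of the initiating cause, so it gets no credit.
without_sis = mitigated_frequency(
    INITIATING_FREQUENCY,
    [pfd for name, pfd in layers.items() if name != "safety instrumented system"],
)

for label, freq in [("all layers credited", all_credited),
                    ("SIS not credited", without_sis)]:
    verdict = "tolerable" if freq <= TOLERABLE_FREQUENCY else "exceeds tolerable risk"
    print(f"{label}: {freq:.1e} per year ({verdict})")
```

Under these assumed numbers, losing credit for a single layer moves the scenario from tolerable to intolerable, which is the practical reason a common security vulnerability across control and safety systems matters so much.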

Having completed the LOPA or another method of estimating the likelihood, the final step in the front end of the combined safety-and-security life cycle is to compare the results with facility or corporate tolerable risk guidelines and to document the results. Whenever the estimated risk exceeds the tolerable risk, there is work to be done. That work is covered in the subsequent phases of the combined life cycle and will be discussed in subsequent articles. However, the following case study from a major U.S. refinery illustrates the analysis phase and the subsequent develop/implement phase.

This particular refinery was concerned about the safety, security and reliability of its safety systems (and thus the safety, security and reliability of the entire facility). To start, a team conducted a security risk analysis of all control systems, expanding on existing safety HAZOP studies. The results were used to drive an FMEA that clearly showed possible common mode failures of the control and safety systems due to either accidental network traffic storms or deliberate denial-of-service (DoS) attacks. While the safety systems would fail in a "safe" manner, even under attack, it was clear that the consequence would be a significant (and thus expensive) plant outage. In addition, the probability of occurrence was estimated to be high because the skill level needed to mount such an attack was close to zero (in fact, the same effect could be caused accidentally), the attractiveness of the site to possible criminal or terrorist groups was high, and the layers of protection were limited.

The lack of clearly defined security layers drove the decision to adopt a zone-and-conduit model, as defined in ANSI/ISA-99.02.01, as the solution for the realization/addressing phase. The plant business network, the management network, the basic control system, the supervisory (operator) system and the safety system were each defined as a separate security zone (see Figure 4). Between these, approved "conduits" were defined for inter-zone communications. For example, only the Supervisory Zone was permitted to have a direct connection to the Business Network Zone, while the Safety Zone had only a single approved conduit, to the Supervisory Zone (for additional information on the use of zone and conduit models, see http://www.tofinosecurity.com/ansi-isa99).
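One way to picture a zone-and-conduit model is as a small graph: zones are nodes and approved conduits are the only permitted edges. The Python sketch below encodes the conduits named in this case study (Business to Supervisory, Supervisory to Safety, and Supervisory to Basic Control). The article does not describe the Management Zone's conduits, so none are listed for it here; the identifiers are hypothetical and this is not how any particular product stores its configuration.

```python
from enum import Enum


class Zone(Enum):
    BUSINESS = "Business Network Zone"
    MANAGEMENT = "Management Zone"
    SUPERVISORY = "Supervisory Zone"
    BASIC_CONTROL = "Basic Control Zone"
    SAFETY = "Safety Zone"


# Each approved conduit is an unordered pair of zones.
# Management Zone conduits are not described in the case study and are omitted.
APPROVED_CONDUITS = {
    frozenset({Zone.BUSINESS, Zone.SUPERVISORY}),
    frozenset({Zone.SUPERVISORY, Zone.BASIC_CONTROL}),
    frozenset({Zone.SUPERVISORY, Zone.SAFETY}),
}


def is_approved(src: Zone, dst: Zone) -> bool:
    """Traffic within a zone is allowed; inter-zone traffic needs an approved conduit."""
    return src == dst or frozenset({src, dst}) in APPROVED_CONDUITS


def conduits_for(zone: Zone) -> list:
    """Return every approved conduit that touches the given zone."""
    return [c for c in APPROVED_CONDUITS if zone in c]


print(is_approved(Zone.SUPERVISORY, Zone.SAFETY))  # approved conduit
print(is_approved(Zone.BUSINESS, Zone.SAFETY))     # no direct conduit
print(len(conduits_for(Zone.SAFETY)))              # Safety Zone has a single conduit
```

Writing the model down this explicitly makes it easy to review: any proposed connection that is not in the approved set is a deviation that needs to be justified or removed.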

Once the zones and conduits were defined, the technical aspects of the solution began. The engineering team defined appropriate safety/security controls on each of the conduits to regulate inter-zone traffic. For example, to manage all network traffic into the Safety Zone from the Supervisory Zone, Tofino industrial firewalls were placed between the two zones. These specially designed security devices are hardened for industrial environments and engineered specifically to manage MODBUS/TCP traffic, the network protocol used to communicate with the SIS. They were also designed to be transparent to the network on start-up so they would not disrupt refinery operations while being commissioned. Similar firewalls were also placed between the Basic Control Zone and the Supervisory Zone.

It is interesting that, while the firewalls were being installed, the engineering team noted that Windows PCs on the Supervisory network were generating a significant number of "multicast" messages on the safety and control network, even though these messages were of no use to the SIS controllers. Messages like these were a significant factor in another well-known nuclear safety incident, which occurred at Alabama's Browns Ferry reactor in August 2006, where network traffic caused a redundant cooling water system to fail. This time, the firewalls were configured to silently drop these nuisance messages as they entered the Safety Zone. Two years later, the safety system has continued to operate without incident, despite numerous changes to the networks around it.
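The filtering behavior described here can be illustrated with a short Python sketch: drop anything addressed to a multicast group, and otherwise admit only TCP traffic from the Supervisory Zone to the Safety Zone on the registered Modbus/TCP port (502). The subnets, the `Packet` type and the function name are hypothetical, and the sketch is not the configuration language of the Tofino firewall or any other product.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

MODBUS_TCP_PORT = 502  # registered port for Modbus/TCP

# Hypothetical address ranges for the two zones.
SUPERVISORY_NET = ip_network("10.0.2.0/24")
SAFETY_NET = ip_network("10.0.3.0/24")


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    protocol: str  # "tcp" or "udp"
    dst_port: int


def admit_to_safety_zone(pkt: Packet) -> bool:
    """Return True only for traffic allowed across the Supervisory-to-Safety conduit."""
    src, dst = ip_address(pkt.src), ip_address(pkt.dst)
    if dst.is_multicast:
        # Nuisance multicast traffic is silently dropped at the zone boundary.
        return False
    return (
        src in SUPERVISORY_NET
        and dst in SAFETY_NET
        and pkt.protocol == "tcp"
        and pkt.dst_port == MODBUS_TCP_PORT
    )


packets = [
    Packet("10.0.2.15", "10.0.3.10", "tcp", 502),        # operator station polling the SIS
    Packet("10.0.2.15", "239.255.255.250", "udp", 1900),  # multicast discovery chatter
    Packet("10.0.1.7", "10.0.3.10", "tcp", 502),          # source outside the Supervisory Zone
]

for pkt in packets:
    action = "ALLOW" if admit_to_safety_zone(pkt) else "DROP"
    print(f"{action}: {pkt.src} -> {pkt.dst} {pkt.protocol}/{pkt.dst_port}")
```

The design choice worth noting is the default: everything is dropped unless it matches the one traffic pattern the conduit exists to carry, so new sources of unexpected traffic elsewhere on the network do not reach the SIS.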

While it is easy to get caught up in an IT view of security and think only of hackers and viruses, for the operator of a hydrocarbon processing facility, security is much more. Security is about maintaining the reliability and safety of the entire system. As a result, the security of process systems can be significantly improved with a coordinated approach to both safety and security, starting with the initial analysis. By following a well-defined process, this analysis phase can be cost-effective and can significantly improve the reliability of the entire processing facility, providing an excellent return on investment.

About the authors

John Cusimano is director of exida's security services division.

Eric Byres is the Chief Technology Officer of Byres Security Inc.
